Privacy Preserving Machine Learning (PPML) is an approach to preventing data leakage in machine learning algorithms. PPML combines several privacy-enhancing strategies so that multiple data sources can collaboratively train ML models without exposing private or sensitive data.

Artificial intelligence (AI) dominates the digital transformation in business today. In 2021, 86% of CEOs reported that AI was considered a mainstream technology in their office, and 91.5% of leading businesses invest in AI on an ongoing basis. Looking ahead, Forbes projects that the global AI market will reach $267 billion by 2027, and according to PwC, AI is expected to contribute $15.7 trillion to the global economy by 2030.

As the use of AI grows, so does the threat to information privacy through data leakage. The more information flows, the more opportunities there are for data to be transmitted without authorization from within an organization to an external recipient. With humanity’s collective data projected to reach 175 zettabytes (175 followed by 21 zeros, in bytes) by 2025, preventing such leaks becomes paramount.

However, not all data leaks are created equal. Leaked credit card information threatens the financial future of the individuals and entities who own those cards, but leaked genomic data has the potential to slow down research into life-threatening diseases, cancers, and the next pandemic. Healthcare-related data breaches are also more common than ever: attacks on the healthcare sector rose by 71% in 2021 alone.

Typical privacy-preserving measures rely on either synthetic data (SD) or anonymized/de-identified data, both of which degrade the quality of machine learning (ML) models. Privacy-enhancing technologies (PETs) offer a wider range of tools, including cryptographic techniques such as Fully Homomorphic Encryption and Multiparty Computation, as well as Differential Privacy and Federated Learning.

None of these is a silver bullet, however; the right solution combines several of them. The answer to the privacy/AI conflict is PPML: a step-by-step process that allows ML models to be trained without revealing or decrypting the data inputs. The goal of PPML is to protect those inputs without sacrificing the accuracy of the model or the quality of the underlying data. In this blog, I will define key characteristics of PPML and explain how Duality uses privacy-enhancing technologies (PETs) to facilitate fast and cost-effective PPML for any use case.

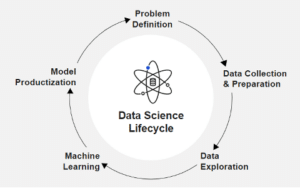

To understand how PETs fit into the Privacy Preserving Machine Learning (PPML) process at Duality, it’s important first to understand the data science lifecycle.

The Data Science Lifecycle consists of 5 distinct stages:

Data privacy must be protected at every stage of the data science lifecycle.

Broadly, there are two paths to PPML: using differential privacy (DP) to add random “noise” to the data, or using other PETs such as fully homomorphic encryption (FHE) or multi-party computation (MPC) to analyze data while it remains encrypted.

Differential privacy is a data aggregation method that adds calibrated random “noise” to the data or to results computed from it, so that the output reveals little about any individual record and cannot be reverse-engineered to recover the original inputs. DP is used by Microsoft and in open-source libraries to protect privacy when creating and tuning ML models, but it carries a distinct tradeoff in data reliability. Because the accuracy of an ML model depends on the quality of its data, the more noise is added to the underlying dataset, the lower the accuracy and reliability of that data, and of the model built on it.
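To make the noise/accuracy tradeoff concrete, here is a minimal sketch of the Laplace mechanism, one standard way to achieve DP for a counting query. It is an illustration only, not the mechanism used by Microsoft, any particular library, or Duality; the helper names, the toy list of ages, and the ε (epsilon) values are hypothetical. The noise scale is the query's sensitivity divided by ε, so a smaller ε (stronger privacy) means more noise and a larger expected error.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    # expovariate takes a rate (1 / mean), so 1 / scale gives mean `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Hypothetical helper: Laplace mechanism for a counting query."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # adding or removing one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(records, predicate, epsilon: float,
                   trials: int = 5000, seed: int = 0) -> float:
    """Average |noisy - true| over many simulated releases.

    Repeating releases is only done here to measure error; in a real
    deployment every release spends part of the privacy budget.
    """
    rng = random.Random(seed)
    true_count = sum(1 for r in records if predicate(r))
    total = 0.0
    for _ in range(trials):
        total += abs(private_count(records, predicate, epsilon, rng) - true_count)
    return total / trials


# Hypothetical toy dataset: one age per patient.
ages = list(range(18, 90))


def is_senior(age: int) -> bool:
    return age >= 65  # 25 of the ages above satisfy this


for eps in (0.1, 1.0, 10.0):
    err = mean_abs_error(ages, is_senior, eps)
    print(f"epsilon={eps:>5}: mean absolute error ~ {err:.2f}")
```

With sensitivity 1, the expected absolute error of each noisy count equals 1/ε, so moving from ε = 1.0 to ε = 0.1 multiplies the typical error by roughly ten. That is the reliability cost described above.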

PETs such as FHE and MPC protect those data inputs without compromising data quality. FHE, a form of encryption for data in use, allows computations to be performed on data while it remains encrypted; MPC allows multiple parties to jointly compute over their combined data sets without revealing the underlying data to one another. Together, FHE and MPC allow many different collaborators to combine their data sources, even sensitive ones, and to build an encrypted model on that data without ever decrypting it.
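As a rough illustration of the MPC idea, the sketch below uses additive secret sharing, a basic building block of many MPC protocols, to let several parties learn the sum of their private values without any party seeing another's input. It is a toy, semi-honest simulation in a single process, not Duality's protocol and not FHE; the modulus, the function names, and the hospital counts are assumptions chosen for the example.

```python
import secrets

# Field modulus for the shares; 2**61 - 1 is a Mersenne prime (illustrative choice).
# Inputs are assumed non-negative and their total smaller than PRIME.
PRIME = 2**61 - 1


def share(value: int, n_parties: int) -> list[int]:
    """Split `value` into n additive shares that sum to `value` mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recombine additive shares into the secret."""
    return sum(shares) % PRIME


def secure_sum(private_inputs: list[int]) -> int:
    """Simulate n parties computing the sum of their inputs via secret sharing."""
    n = len(private_inputs)
    # Party i splits its input and (conceptually) sends share j to party j.
    dealt = [share(x, n) for x in private_inputs]
    # Party j adds up the shares it received; this partial sum is random on its own.
    partial_sums = [sum(dealt[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Combining all partial sums reveals only the total.
    return reconstruct(partial_sums)


# Hypothetical example: three hospitals with private patient counts.
print(secure_sum([120, 340, 75]))  # 535
```

Each individual share, and each party's partial sum, is uniformly random when viewed alone; only the combination of all partial sums reveals the total. Real MPC frameworks add secure channels, defenses against malicious parties, and support for multiplication, all of which this sketch omits.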

Depending on how data is linked across data sets, other PETs may be used as well. Federated Learning (FL) enables statistical analysis or model training on decentralized data sets via a “traveling algorithm”: the model is sent to each data source and gets “smarter” with every local round of training, while the raw data stays where it is. FL is primarily suited to horizontally partitioned data sets (the same features, held for different records), and it should be combined with additional PETs that protect the inputs themselves, because the model updates it exchanges can still leak information about the training data.
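The sketch below illustrates federated averaging (FedAvg), one common FL algorithm, on horizontally partitioned data: three hypothetical clients hold different records with the same single feature, each fits a linear model locally, and a coordinator averages the returned weights, weighted by client data size. Everything here (the toy data generated from y = 3x + 2, the learning rate, and the round counts) is an assumption for illustration. The exchanged weights travel in the clear in this sketch, which is why FL is paired with other PETs in practice.

```python
import random


def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of SGD for y = w*x + b on one client's private data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error for this record
            w -= lr * err * x
            b -= lr * err
    return w, b


def federated_averaging(clients, rounds=20):
    """FedAvg: only model weights leave each client, never the (x, y) records."""
    global_w, global_b = 0.0, 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [local_update((global_w, global_b), data) for data in clients]
        # Weight each client's model by how many records it trained on.
        global_w = sum(len(d) * uw for d, (uw, _) in zip(clients, updates)) / total
        global_b = sum(len(d) * ub for d, (_, ub) in zip(clients, updates)) / total
    return global_w, global_b


# Hypothetical horizontally partitioned data: same feature, different records.
rng = random.Random(0)


def make_client(n_records):
    records = []
    for _ in range(n_records):
        x = rng.random()
        records.append((x, 3.0 * x + 2.0 + rng.gauss(0.0, 0.05)))
    return records


clients = [make_client(n) for n in (40, 60, 50)]
w, b = federated_averaging(clients)
print(f"w = {w:.2f}, b = {b:.2f}")  # close to the true values 3 and 2
```

Weighting by record count makes the global model behave as if it had been trained on the pooled records, without any client revealing those records directly.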

Data privacy is often seen as a cryptographer’s field, but implementing Privacy Enhancing Technologies and Privacy Preserving Machine Learning correctly also requires top data scientists who fully understand the many transformations that may be applied to data and how each one affects the final result.

To understand more about PPML and lattice-based cryptography, watch our latest webinar, “Cryptography Enabled Data Science: Meeting the Demands of Data Driven Enterprise.”