The EU AI Act’s purpose is to “lay down a comprehensive legal framework for the development, marketing, and use of AI in the EU in conformity with EU values, to promote the human-centric and trustworthy AI while ensuring a high level of protection of health, safety and fundamental rights, and support innovation while mitigating harmful effects of AI.”

The Act is setting the standard for AI regulation internationally, but it also imposes some challenging requirements on providers of high-risk AI systems.

- Proof of model efficacy and controls often cannot be achieved effectively with anonymized, synthetic, or other non-personal data.

- This means teams will need to source real client data to earn the CE marking they need to go to market. That is a big challenge: the data owner has its own requirements to follow and must know, not merely trust, that its data is secured.

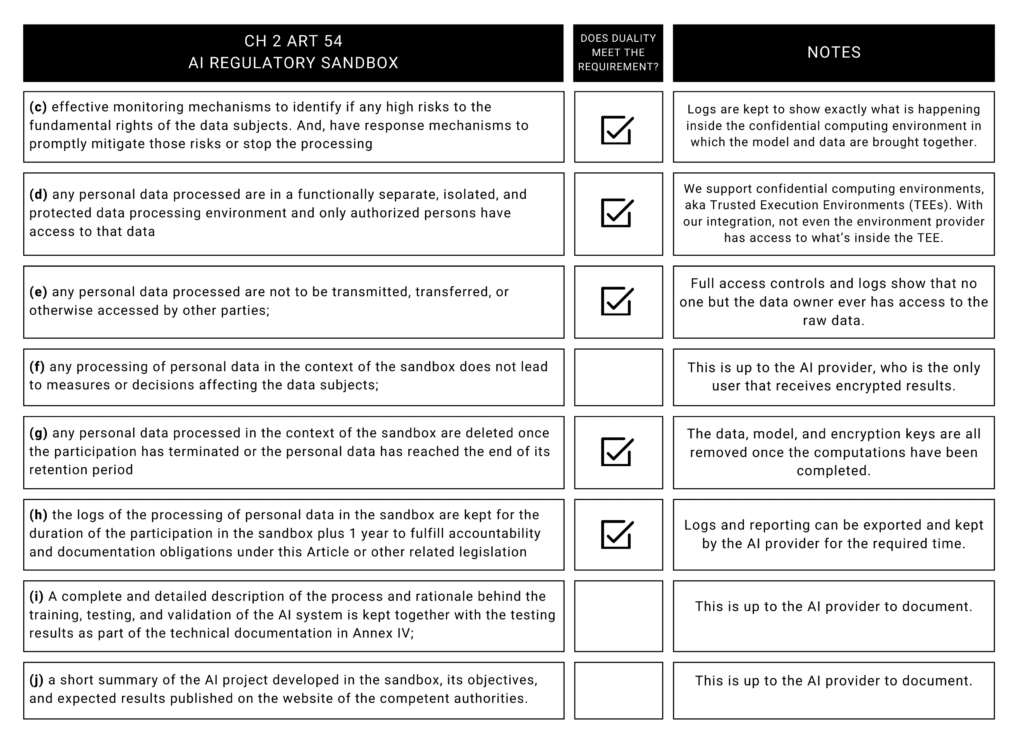

- AI Regulatory Sandbox requirements

- Article 54 goes on to define the requirements for an AI regulatory sandbox, with a focus on data governance.

Fortunately, Duality’s Secure Collaborative AI addresses both of these challenges and, by design, meets almost all of the Article 54 requirements that a secure collaboration platform can address. Let’s break down each component and identify whether Duality meets it:

(b) the data processed are necessary for complying with one or more of the requirements referred to in Title III, Chapter 2 where those requirements cannot be effectively fulfilled by processing anonymized, synthetic, or other non-personal data;

- Convincing a third party to provide real-world data without first providing the guarantees below can be a challenge. Data localization requirements can further limit the pool of potential data sources. Fortunately, Duality allows the AI regulatory sandbox environment to be configured wherever the data must reside.

(c) there are effective monitoring mechanisms to identify if any high risks to the fundamental rights of the data subjects may arise during the sandbox experimentation as well as response mechanisms to promptly mitigate those risks and, where necessary, stop the processing;

- Met. In our platform, logs are kept to show exactly what is happening inside the confidential computing environment in which the model and data are brought together.

(d) any personal data to be processed in the context of the sandbox are in a functionally separate, isolated, and protected data processing environment under the control of the participants and only authorized persons have access to that data;

- Met. We support confidential computing environments, aka Trusted Execution Environments (TEEs), which satisfy this requirement. With our integration, not even the environment provider has access to what’s inside the TEE.

(e) any personal data processed are not to be transmitted, transferred, or otherwise accessed by other parties;

- Met. Full access controls and logs will show that no one but the data owner ever has access to the raw data.

(f) any processing of personal data in the context of the sandbox does not lead to measures or decisions affecting the data subjects;

- Out of scope for a platform. This is up to the AI provider, the only party that receives the encrypted results.

(g) any personal data processed in the context of the sandbox are deleted once the participation in the sandbox has terminated or the personal data has reached the end of its retention period;

- Met. The data, model, and encryption keys are all removed once the computations have been completed.

(h) the logs of the processing of personal data in the context of the sandbox are kept for the duration of the participation in the sandbox and 1 year after its termination, solely for the purpose of and only as long as necessary for fulfilling accountability and documentation obligations under this Article or other applicable Union or Member States legislation;

- Met. Logs and reporting can be exported and kept by the AI provider for the required time.
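To make the retention window in (h) concrete, here is a minimal sketch of how an AI provider might compute the date through which exported logs must be kept. The function names are hypothetical and not part of any Duality API, and reading "1 year after its termination" as the same calendar date one year later is an assumption to confirm with your legal team.

```python
from datetime import date


def log_retention_deadline(sandbox_end: date) -> date:
    """Last day the logs must be kept: one calendar year after the sandbox ends.

    Assumption: "1 year" means the same calendar date in the following year.
    """
    try:
        return sandbox_end.replace(year=sandbox_end.year + 1)
    except ValueError:
        # 29 February has no counterpart in a non-leap year; roll forward to 1 March.
        return date(sandbox_end.year + 1, 3, 1)


def must_retain_logs(today: date, sandbox_end: date) -> bool:
    """True while the logs are still inside the mandatory retention window."""
    return today <= log_retention_deadline(sandbox_end)
```

For a sandbox that ends on 30 June 2025, this sketch keeps the logs through 30 June 2026 and allows deletion from 1 July 2026 onward.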

(i) A complete and detailed description of the process and rationale behind the training, testing, and validation of the AI system is kept together with the testing results as part of the technical documentation in Annex IV;

- Out of scope. This is up to the AI provider to document.

(j) a short summary of the AI project developed in the sandbox, its objectives, and expected results published on the website of the competent authorities.

- Out of scope. This is up to the AI provider to document.
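The verdicts above can be captured in a small checklist so a team can see at a glance which items remain its own responsibility. This is only an illustrative sketch: the dictionary and function names are hypothetical, and item (b) is left out because the walkthrough above does not give it an explicit "Met" or "Out of scope" verdict.

```python
from collections import Counter

# Verdicts copied from the walkthrough above; (b) is omitted because the
# text gives it no explicit "Met" / "Out of scope" label.
ARTICLE_54_STATUS = {
    "c": "met",           # monitoring and response mechanisms
    "d": "met",           # isolated, protected processing environment
    "e": "met",           # no access to personal data by other parties
    "f": "out_of_scope",  # no decisions affecting data subjects
    "g": "met",           # deletion after participation ends
    "h": "met",           # log retention
    "i": "out_of_scope",  # technical documentation (Annex IV)
    "j": "out_of_scope",  # published project summary
}


def summarize(status: dict[str, str]) -> Counter:
    """Count how many items fall under each verdict."""
    return Counter(status.values())


def provider_owned(status: dict[str, str]) -> list[str]:
    """Items the AI provider must still handle outside the platform."""
    return sorted(item for item, verdict in status.items() if verdict == "out_of_scope")
```

Calling `provider_owned(ARTICLE_54_STATUS)` returns the items the AI provider still has to cover itself: (f), (i), and (j).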

So, if you’re up against these regulatory sandbox requirements, there’s a hard way and an easy way to comply:

1. The hard way: use a confidential computing environment or secure enclave from any CSP (AWS, GCP, Azure, IBM, etc.) and build everything needed to meet the requirements around it yourself, OR

2. The easy way: use Duality Technologies’ Secure Collaborative AI solution, which meets the platform-level requirements above by default and supports multi-cloud workflows, so teams can train, tune, and run inference on models wherever the data must reside.

We’ve built our platform around human-centric and trustworthy AI development. Using privacy-preserving technology, we prioritize your data security and IP, protecting both your model and the data used to build and train it. The solution integrates with confidential computing environments, also known as Trusted Execution Environments (TEEs), and both the data and the models are encrypted before being sent to the environment. No one, not even the provider of the TEE, has access to the environment while models are running. This governance and security mean that data owners can be satisfied with the data protections in place, and AI providers have the flexibility to train wherever the data must be stored, knowing that their model IP is also protected.

If you’re interested in Duality Technologies, reach out to our team or get access to our AI sandbox.