- Platform

- Solutions

- Government

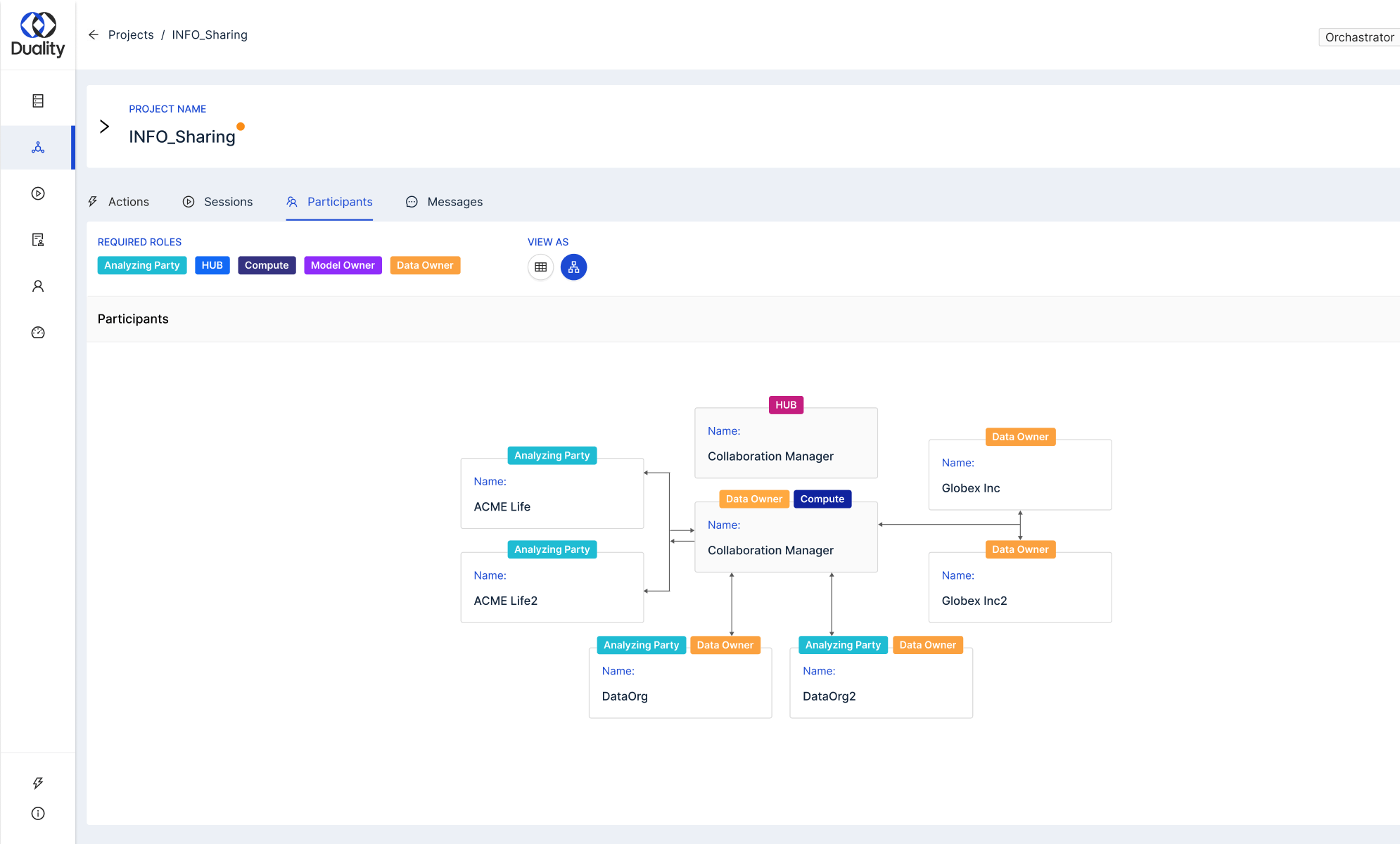

Government Overview

Institute privacy-preserving data collaborations.

Zero Footprint Investigations & Intelligence

- Cross Domain Zero Footprint Investigations & Intelligence

- Cross Departments Analytics

- AI for Strategic & Tactical Defense

- Unlock Military Health Insights

- Secure Semantic Search

- Border Threat Detection

- Healthcare

- Financial Institutions

- Marketing

- Data Service Providers

- Manufacturing

- Insurance

- Partners

- Resources

- Company