Federated learning is a machine learning approach where multiple parties train a shared model without sharing raw data.

Each participant, whether a device, system, or organization, trains the model locally and sends only model updates (such as gradients or weights) to a coordinator. The coordinator aggregates these updates into a global model.

Federated learning in this general sense is the umbrella concept.

Private Federated Learning (PFL) is a specialized form that adds cryptographic protections and other privacy-enhancing techniques on top of this baseline.

It is particularly important in regulated sectors like finance, healthcare, and government, where “no raw data sharing” alone is not enough.

The main difference between a federated architecture and a centralized architecture is where data lives and where training happens.

Centralized Architecture: Data from multiple sources is collected and stored in a single location (for example, a cloud server or internal data lake). Models are trained directly on the combined dataset. This can simplify development and operations, but it increases privacy, security, and compliance exposure because the central repository becomes a high-value target and a governance bottleneck.

Federated Architecture: Data stays with each participant (a device, system, or organization). Instead of moving data to a central environment, the model (or training job) is sent to each data source. Each participant trains locally and sends back model updates (such as weights or gradients) for aggregation into a shared global model. This enables collaboration across distributed or regulated datasets without exposing raw records.

Key Insight: Centralized architectures optimize for simplicity and control, while federated architectures optimize for collaboration under constraints—especially when data cannot move due to regulation, IP sensitivity, classification, or organizational boundaries.

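At a high level, a federated training round follows these steps:

1. The coordinator sends the current global model to each participant.
2. Each participant trains the model on its local data.
3. Each participant sends back only its model update (such as weights or gradients).
4. The coordinator aggregates the updates into a new global model, and the next round begins.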

This pattern enables a federated model to learn from distributed data while each party keeps control of its own environment.
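A minimal sketch of such a round, assuming a toy one-parameter linear model (y ≈ w·x), plain gradient descent for local training, and sample-size-weighted averaging (the FedAvg rule) at the coordinator. The function names and client data are hypothetical; real frameworks add client selection, networking, and failure handling.

```python
from typing import List, Tuple

# A client's private dataset: (x, y) pairs that never leave the client.
Dataset = List[Tuple[float, float]]


def local_train(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few epochs of gradient descent on mean squared error, starting from the global w."""
    for _ in range(epochs):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * x * (w * x - y) for x, y in data) / len(data)
        w -= lr * grad
    return w


def fedavg_round(global_w: float, clients: List[Dataset]) -> float:
    """One round: send the global model out, train locally, average the results."""
    updates = []
    for data in clients:
        local_w = local_train(global_w, data)   # training happens on the client
        updates.append((local_w, len(data)))    # only the new weight and a sample count are sent
    total = sum(n for _, n in updates)
    # The coordinator averages local weights, weighted by each client's data size.
    return sum(w * n for w, n in updates) / total


if __name__ == "__main__":
    # Three hypothetical clients whose data all follow y = 3x.
    clients = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(3.0, 9.0)],
        [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
    ]
    w = 0.0
    for _ in range(50):
        w = fedavg_round(w, clients)
    print(round(w, 3))  # close to 3.0
```

The coordinator never touches an (x, y) pair; it only sees one weight and one count per client per round.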

The key advantage? Organizations and devices can benefit from shared AI models while keeping their intellectual property and sensitive data protected.

Federated learning addresses practical and regulatory constraints that centralized training often cannot.

These advantages explain why federated AI, federated computing, and distributed learning architectures are gaining momentum in sensitive data environments.

While standard federated learning is often described as “privacy-preserving,” it has key limitations that organizations need to consider:

→ Potential Data Leakage

Even when raw data remains local, model updates, such as gradients or weights, can unintentionally reveal information about individual records. Sophisticated attackers may exploit these updates through inference or reconstruction techniques.
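A toy illustration of why updates can leak, assuming a single fully connected layer z = Wx + b trained on one record with squared-error loss. For this layer, the gradient with respect to W is the outer product of dL/dz with x, and the gradient with respect to b is dL/dz itself, so anyone who sees the update can divide a row of the weight gradient by the matching bias gradient and recover x exactly. Attacks on deeper models are more involved; this sketch only shows the underlying principle and is not a specific published attack.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def layer_gradients(W: Matrix, b: Vector, x: Vector, target: Vector):
    """Gradients of L = 0.5 * ||Wx + b - target||^2 for a single record x."""
    z = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]
    dz = [z_i - t_i for z_i, t_i in zip(z, target)]      # dL/dz
    grad_W = [[dz_i * x_j for x_j in x] for dz_i in dz]   # dL/dW = dz * x^T
    grad_b = dz[:]                                        # dL/db = dz
    return grad_W, grad_b


def reconstruct_input(grad_W: Matrix, grad_b: Vector) -> Vector:
    """Recover x from a shared update: row i of dL/dW divided by dL/db_i."""
    for row, g in zip(grad_W, grad_b):
        if abs(g) > 1e-12:  # any row with a nonzero bias gradient works
            return [v / g for v in row]
    raise ValueError("all bias gradients are zero; x cannot be recovered this way")


if __name__ == "__main__":
    W = [[0.2, -0.1, 0.4], [0.3, 0.5, -0.2]]
    b = [0.1, -0.3]
    private_record = [1.5, -2.0, 0.7]  # stays on the client...
    grad_W, grad_b = layer_gradients(W, b, private_record, target=[1.0, 0.0])
    # ...but the update it sends lets the coordinator rebuild it.
    print(reconstruct_input(grad_W, grad_b))
```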

→ Risks from Untrusted Participants

Malicious or compromised clients can introduce poisoned updates or backdoors, potentially skewing the global model or undermining its reliability.
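A toy illustration, assuming the coordinator computes a plain (unweighted) average of scalar updates with no validation: a single client sending an extreme value drags the aggregate far from what the honest clients agree on. The update values are made up for illustration.

```python
from statistics import mean
from typing import List


def aggregate(updates: List[float]) -> float:
    """Plain averaging with no checks on individual contributions."""
    return mean(updates)


honest = [0.9, 1.1, 1.0, 0.95, 1.05]  # honest clients roughly agree on 1.0
poisoned = honest + [-50.0]           # one compromised client sends an extreme update

print(f"honest only:   {aggregate(honest):.2f}")    # 1.00
print(f"with poisoned: {aggregate(poisoned):.2f}")  # -7.50
```

One participant out of six is enough to flip the sign of the global update.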

→ Limited Cryptographic Protection

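Many standard deployments rely primarily on transport-level encryption (such as TLS), which protects updates only while they are in transit. The server decrypts them on arrival, so without additional protections, individual updates are exposed in cleartext to the central server and its operators, leaving sensitive information vulnerable.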

→ Compliance and Regulatory Demands

In highly regulated sectors like finance, healthcare, and public services, simply avoiding raw data sharing is rarely sufficient. Regulators increasingly expect robust technical safeguards, formalized privacy guarantees, and strong governance controls.

While standard federated learning enables collaborative model training, it does not fully address privacy, security, or compliance requirements. These are the gaps that Private Federated Learning (PFL) is specifically designed to close.

Private Federated Learning (PFL) extends the basic idea of federated learning with privacy-enhancing technologies (PETs) that protect both data and model updates.

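PFL is designed for environments where participants or operators may be curious or malicious, and where strict regulatory requirements apply.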

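Common techniques in PFL include secure multiparty computation (SMPC), homomorphic encryption (HE), and trusted execution environments (TEEs).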

By layering these protections, PFL provides stronger privacy, security, and governance than standard federated learning, without abandoning the distributed training model.
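One SMPC-style protection, usually called secure aggregation, can be sketched with pairwise additive masks. This sketch assumes every pair of clients already shares a random mask (real protocols derive these through key agreement and handle client dropouts, both omitted here). Each client adds masks that cancel out in the sum, so the coordinator learns the total update but not any individual one. The modulus, fixed-point scale, and helper names are illustrative assumptions.

```python
import random
from typing import Dict, List, Tuple

MODULUS = 2**61 - 1  # illustrative; all arithmetic is done modulo this value
SCALE = 10**6        # fixed-point scale for encoding real-valued updates


def encode(x: float) -> int:
    return round(x * SCALE) % MODULUS


def decode(v: int) -> float:
    # Values above MODULUS // 2 represent negative numbers.
    if v > MODULUS // 2:
        v -= MODULUS
    return v / SCALE


def pairwise_masks(n_clients: int, seed: int = 0) -> Dict[Tuple[int, int], int]:
    """Stand-in for shared secrets: one random mask per unordered client pair."""
    rng = random.Random(seed)
    return {(i, j): rng.randrange(MODULUS)
            for i in range(n_clients) for j in range(i + 1, n_clients)}


def mask_update(client: int, update: float,
                masks: Dict[Tuple[int, int], int], n_clients: int) -> int:
    """Add masks shared with higher-indexed peers, subtract those shared with lower-indexed peers."""
    v = encode(update)
    for other in range(n_clients):
        if other > client:
            v += masks[(client, other)]
        elif other < client:
            v -= masks[(other, client)]
    return v % MODULUS


def server_aggregate(masked: List[int]) -> float:
    """The coordinator only sees masked values; the masks cancel in the sum."""
    return decode(sum(masked) % MODULUS)


if __name__ == "__main__":
    updates = [0.25, -1.5, 0.75]
    n = len(updates)
    masks = pairwise_masks(n)
    masked = [mask_update(i, u, masks, n) for i, u in enumerate(updates)]
    print(masked)                    # look like unrelated large integers
    print(server_aggregate(masked))  # -0.5, the sum of the hidden updates
```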

Here is a concise side-by-side view that reflects how practitioners think about the two.

| Aspect | Federated Learning (FL) | Private Federated Learning (PFL) |
| --- | --- | --- |
| Core Goal | Train a shared model without moving raw data | Train a shared model without moving raw data and protect updates |
| Data Handling | Data stays local; updates sent in clear or lightly protected | Data stays local; updates protected with PETs (SMPC, HE, TEEs) |
| Threat Model | Assumes honest or semi-honest participants | Assumes malicious or curious participants and stronger adversaries |
| Privacy Guarantees | Informal or architecture-level | Formal or stronger guarantees around leakage and reconstruction |
| Regulatory Fit | Helpful but may be insufficient for high-risk use cases | Designed for strict financial, healthcare, and public sector requirements |
| Typical Use Cases | Edge personalization, basic cross-silo collaboration | Cross-institution analytics, regulated data, high-stakes AI decisions |
| Implementation Complexity | Lower operational and cryptographic complexity | Higher complexity, but stronger controls and assurances |
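To make the "HE" entry in the table concrete, here is a toy sketch of additively homomorphic aggregation using the Paillier scheme. Paillier is swapped in only because it is short enough to write out; it is not the fully homomorphic encryption used in production PFL platforms, and the hard-coded Mersenne primes are far too small and structured to be secure. Multiplying ciphertexts adds the hidden plaintexts, so the coordinator can compute an encrypted total without reading any single update. Keeping all keys in one script, and assuming a separate key holder decrypts only the aggregate, are simplifications for illustration.

```python
import math
import secrets
from typing import List

# Toy key material: Mersenne primes 2^31 - 1 and 2^61 - 1. Insecure; illustration only.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N_SQ = N * N
G = N + 1                        # standard simplified generator
LAMBDA = math.lcm(P - 1, Q - 1)  # private key (held by the key holder, not the coordinator)
MU = pow(LAMBDA, -1, N)          # private key; this shortcut is valid because G = N + 1
SCALE = 10**6                    # fixed-point scale for real-valued updates


def encrypt(m: int) -> int:
    """Paillier encryption of a signed integer m (negatives are taken mod N)."""
    while True:
        r = secrets.randbelow(N - 1) + 1  # random r in [1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m % N, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Paillier decryption, mapped back to a signed integer."""
    x = pow(c, LAMBDA, N_SQ)
    m = ((x - 1) // N) * MU % N
    return m - N if m > N // 2 else m


def add_encrypted(ciphertexts: List[int]) -> int:
    """Homomorphic addition: the product of ciphertexts encrypts the sum of plaintexts."""
    total = 1
    for c in ciphertexts:
        total = (total * c) % N_SQ
    return total


if __name__ == "__main__":
    updates = [0.25, -1.5, 0.75]
    # Each client encrypts its fixed-point-encoded update before sending it.
    ciphertexts = [encrypt(round(u * SCALE)) for u in updates]
    # The coordinator aggregates ciphertexts without decrypting any of them.
    encrypted_sum = add_encrypted(ciphertexts)
    # Only the key holder decrypts, and only the aggregate is revealed.
    print(decrypt(encrypted_sum) / SCALE)  # -0.5
```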

Federated Learning (FL): Ensures data remains in its original location, rather than being centralized.

Private Federated Learning (PFL): Implements additional protections so that model updates and system operations do not expose sensitive information.

Summary: FL addresses data location, while PFL focuses on maintaining privacy and access control throughout the training process.

Federated approaches appear across multiple domains. PFL becomes critical as sensitivity and regulatory pressure increase.

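PFL is typically required when data crosses institutional boundaries, is subject to strict financial, healthcare, or public sector regulation, or drives high-stakes AI decisions.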

In these scenarios, PFL offers the cryptographic and governance layer that allows federated projects to move from pilot to production.

While federated learning helps organizations meet privacy and compliance goals, it also faces technical and operational hurdles:

Key Insight: Understanding these challenges helps organizations plan federated learning deployments effectively, balancing performance, privacy, and operational costs.

While the terms sound similar, federated learning and distributed learning address different problems:

Federated learning is evolving rapidly. Key trends include:

The future of federated learning combines privacy, scalability, and real-world impact, making it a cornerstone of enterprise AI strategy.

Starting with federated learning is about planning carefully and moving step by step:

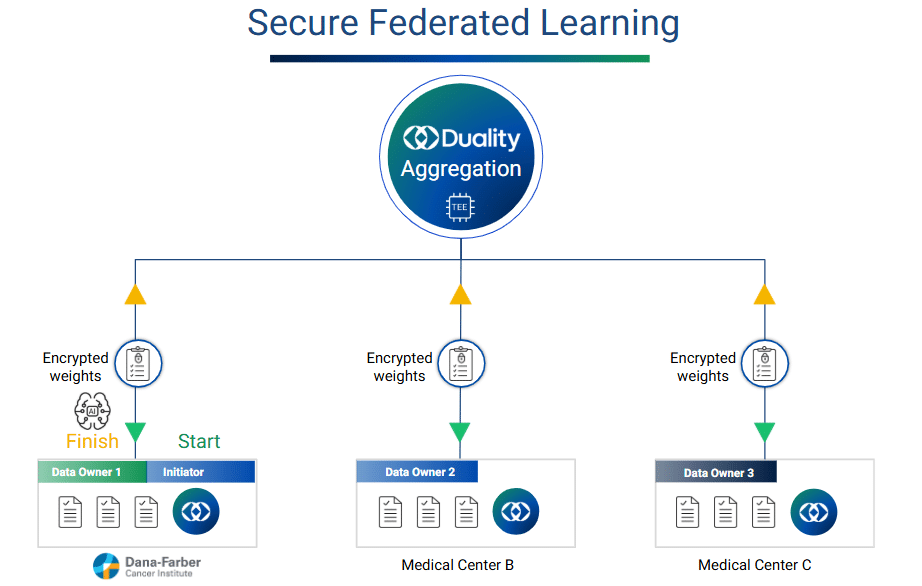

Platforms like Duality address the challenges of federated learning by delivering secure, privacy-preserving solutions. We elevate federated learning by integrating advanced Privacy-Enhancing Technologies (PETs), such as Trusted Execution Environments (TEEs) and Fully Homomorphic Encryption (FHE), directly into AI-driven collaborative workflows.

Duality takes this a step further: once a model is trained, users can run inference on it securely on the Duality platform, with both the model and the data protected.

With Duality Platform SFL, enterprises in finance, healthcare, AI research, and beyond can leverage federated learning across multiple organizations without compromising sensitive data, while maintaining full regulatory compliance and enterprise-grade security.