Operate on any data type, including data you can’t access today, using any model type, from traditional machine learning models to advanced neural networks and generative AI models.

Artificial intelligence and machine learning are reshaping industries, but their full potential can only be realized when models are trained and deployed on the best available data—including sensitive datasets that sit outside organizational boundaries. Accessing and using that data is often blocked by privacy, security, and compliance concerns.

Enterprises already struggle to acquire data for analytics, and the challenge is even greater for AI. Vendors face the same roadblocks: clients fear exposing sensitive data, while vendors fear exposing their IP. As a result, both sides are held back from realizing the value of AI.

Duality changes this. With Privacy-Protected AI Collaboration, teams can securely train and deploy models on sensitive, external datasets without moving or exposing data. Vendors can monetize and prove their models earlier in the sales cycle—on real customer data—while keeping proprietary algorithms protected. Clients gain better models powered by richer data, with privacy and compliance guaranteed.

Developing AI models requires access to real data points to perform complex tasks. Duality allows organizations to leverage the most sensitive real data (rather than just synthetic data) for model development while satisfying privacy, security, and legal concerns by default.

Personalize models on sensitive customer data. Streamline model personalization, from natural language processing applications to predictive analytics, without exposing your models’ IP or needing the client to expose their sensitive data.

The existence of data does not mean it’s usable or accessible. Duality’s solutions remove the privacy and security barriers that keep data locked away, so you can deploy generative AI models against any sensitive data to drive better insights in less time.

Duality’s security measures allow models to be deployed on specific tasks without risking IP leakage, enabling AI vendors to extract the most value from their work without running into privacy and AI security problems.

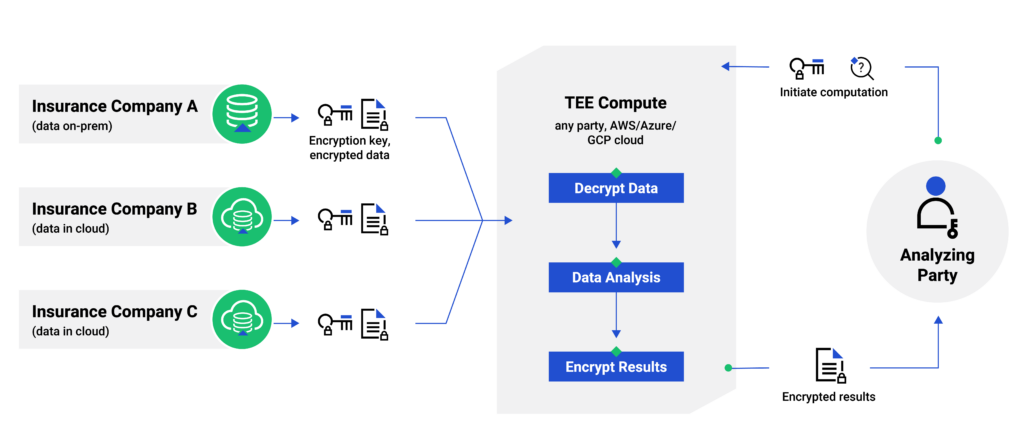

Build and deploy cybercrime, financial crime, and national security models with public and private sector partners, without exposing sensitive data or the models themselves.

Deploy AI models to predict and detect pathologies using medical imaging data linked with Personally Identifiable Information (PII) and Protected Health Information (PHI), without exposing the data or the models to security vulnerabilities.

Link genomic data with other sensitive PII and PHI and deploy models to predict health risks and enable precision medicine and drug discovery.

Build better risk models by combining features across data vendors and financial institutions for enhanced predictive analytics and generated content, while keeping sensitive information and models protected from security threats.

Test third-party models and generative AI offerings on your real-time data before making a purchase decision. Your original data stays protected from security risks at all times, and you reach time to value faster.

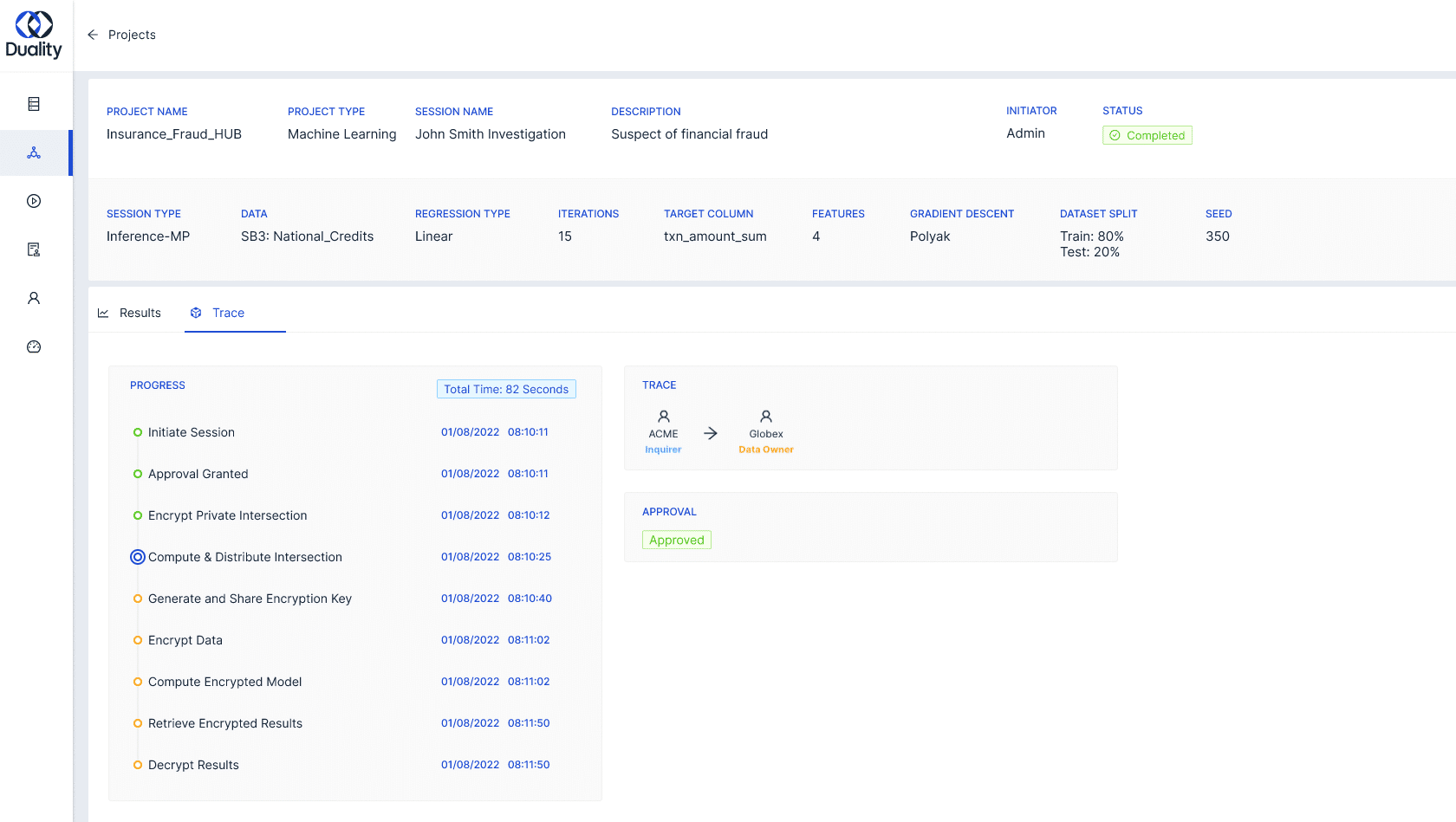

The Duality Platform offers a broad set of privacy technologies and AI applications, supporting the deployment of AI tools and models while ensuring data privacy. The platform is available across environments, including on your premises and on major cloud platforms like AWS, GCP, and Azure. Organizations use it to collaborate with partners while maintaining the governance and controls needed for privacy-protected collaboration.

Learn how Privacy-Protected AI Collaboration supports your growth objectives.