Training an artificial intelligence (AI) model is nothing short of an art. It’s a process that involves precise calibration, scientific understanding, and endless iteration.

Generative models are a category of AI that can generate new data from the same statistical distributions as the training data. Such models are often used to produce synthetic data that can serve as stand-ins for real data during AI training.

What if we want to control some aspect of this data generation?

A conditional GAN (cGAN) is an extension of the classic generative adversarial network (GAN) that adds an extra layer of specificity. Unlike its traditional counterpart, a conditional generative model incorporates ‘conditioning information’ that guides the data generation process. For instance, in computer vision applications, a basic generative model might yield a randomly generated image of a face. A conditional generative model, however, can be specifically directed to generate an image of a face with glasses or the face of a particular individual.

This directed capability makes conditional generative adversarial networks (cGANs) useful in a wide range of applications, from producing realistic video game scenery to synthesizing novel chemical compounds in pharma research.

A conditional GAN has two players: the generator and the discriminator. The generator creates fake images or data, while the discriminator assesses whether each sample it sees is real or generated. Both receive the conditioning information. The two are trained against each other, each trying to outsmart the other, until the generator can produce realistic images that meet the specified conditions.

But it’s not magic—it takes the right setup and careful tuning.

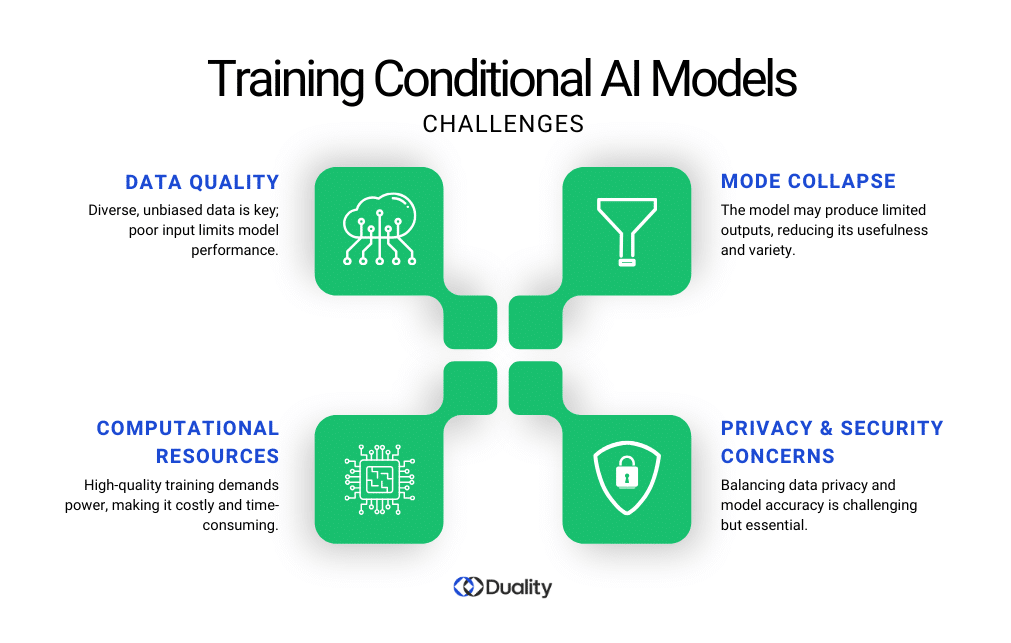

Training a cGAN begins with assembling high-quality training data. This data should be diverse and representative of the conditions you want your model to learn. The broader and more varied the dataset, the better your model will perform at creating realistic images under different conditions. Properly labeled data also helps the model learn how to create diverse outputs that align with different input conditions.

Data augmentation techniques, such as rotating or flipping images, can help increase the diversity of the training set without needing additional data. This improves the model’s adaptability and robustness.
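As a rough illustration of flipping and rotating, the sketch below treats an image as a plain nested list of pixel values. The function names and the tiny 2x2 sample are hypothetical, and a real pipeline would normally rely on an image library rather than hand-written loops.

```python
from typing import List

Image = List[List[int]]  # a grayscale image stored as rows of pixel values


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def rotate_90_clockwise(image: Image) -> Image:
    """Rotate the image a quarter turn clockwise."""
    # Reversing the row order and then transposing gives a clockwise rotation.
    return [list(column) for column in zip(*image[::-1])]


def augment(image: Image) -> List[Image]:
    """Return the original image plus its flipped and rotated variants."""
    return [image, flip_horizontal(image), rotate_90_clockwise(image)]


sample = [[1, 2],
          [3, 4]]
variants = augment(sample)
```

For a conditional model, each augmented copy keeps the label of its source image, so it is worth choosing only transformations that do not change the class (a flipped face is still a face, but a rotated digit may read as a different digit).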

Labeling the data with class labels provides the model with the guidance it needs to understand the conditions it should use when generating outputs.
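Models consume labels as numbers, so class names are usually mapped to integer indices before training. The following is a minimal sketch of that step; the label names are made up for illustration, and the class count at the end is just one simple way to spot an unbalanced dataset.

```python
from collections import Counter
from typing import Dict, List


def build_label_index(class_names: List[str]) -> Dict[str, int]:
    """Assign each distinct class name a stable integer index."""
    return {name: index for index, name in enumerate(sorted(set(class_names)))}


def encode_labels(class_names: List[str], label_index: Dict[str, int]) -> List[int]:
    """Replace each class name with its integer index."""
    return [label_index[name] for name in class_names]


# Hypothetical labels for four face images.
raw_labels = ["glasses", "no_glasses", "glasses", "glasses"]
label_index = build_label_index(raw_labels)
encoded = encode_labels(raw_labels, label_index)

# Counting samples per class shows whether any condition is under-represented.
class_counts = Counter(encoded)
```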

With this foundation, your model can start learning the connections between labels and the features in the data.

Duality ensures your data remains private and compliant, unlocking new insights without the risk.

Once your data is ready, it’s time to start the training process.

The generator starts with a noise vector (a random set of numbers) and uses it to create new images based on the provided class labels. The discriminator model, on the other hand, learns to distinguish between real images from the training set and those created by the generator.
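One common way to feed the condition to both networks is to append a one-hot encoding of the class label to their inputs. The sketch below shows only that input-building step, using plain Python lists. The dimensions, names, and the four-pixel "image" are assumptions for illustration, and other conditioning schemes (such as learned label embeddings) exist.

```python
import random
from typing import List


def sample_noise(latent_dim: int, rng: random.Random) -> List[float]:
    """Draw a noise vector from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]


def one_hot(class_index: int, num_classes: int) -> List[float]:
    """Encode a class index as a vector with a single 1.0 entry."""
    return [1.0 if i == class_index else 0.0 for i in range(num_classes)]


def generator_input(noise: List[float], class_index: int, num_classes: int) -> List[float]:
    """Concatenate the noise vector with the one-hot condition."""
    return noise + one_hot(class_index, num_classes)


def discriminator_input(flat_image: List[float], class_index: int, num_classes: int) -> List[float]:
    """Concatenate a flattened image with the same one-hot condition."""
    return flat_image + one_hot(class_index, num_classes)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
LATENT_DIM = 8          # hypothetical latent size
NUM_CLASSES = 3         # hypothetical number of conditions

z = sample_noise(LATENT_DIM, rng)
g_in = generator_input(z, class_index=2, num_classes=NUM_CLASSES)
d_in = discriminator_input([0.0, 0.5, 1.0, 0.25], class_index=2, num_classes=NUM_CLASSES)
```

Because the discriminator sees the label alongside the image, it can penalize a realistic image that does not match the requested condition, which is what pushes the generator to respect the label.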

During training, the generator aims to improve by making its outputs more convincing, while the discriminator refines its ability to tell the difference between real and fake. This back-and-forth competition, guided by loss functions like binary cross-entropy loss, helps both models get better over time.
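To make the role of binary cross-entropy concrete, here is a small sketch of the two losses computed from discriminator scores (probabilities that a sample is real). It assumes the widely used non-saturating generator loss, where the generator is rewarded when its fakes are scored as real. The function names are illustrative and not taken from any particular framework.

```python
import math
from typing import List

EPS = 1e-12  # keeps log() away from zero


def binary_cross_entropy(predictions: List[float], targets: List[float]) -> float:
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(predictions, targets):
        p = min(max(p, EPS), 1.0 - EPS)  # clamp for numerical safety
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(predictions)


def discriminator_loss(real_scores: List[float], fake_scores: List[float]) -> float:
    """The discriminator wants real samples scored 1 and generated samples scored 0."""
    real_loss = binary_cross_entropy(real_scores, [1.0] * len(real_scores))
    fake_loss = binary_cross_entropy(fake_scores, [0.0] * len(fake_scores))
    return real_loss + fake_loss


def generator_loss(fake_scores: List[float]) -> float:
    """The generator wants its samples scored 1 (non-saturating form)."""
    return binary_cross_entropy(fake_scores, [1.0] * len(fake_scores))


# A discriminator that cannot tell real from fake outputs 0.5 for everything.
undecided_g = generator_loss([0.5, 0.5])
undecided_d = discriminator_loss([0.5], [0.5])
# A confident, correct discriminator has a much lower loss.
confident_d = discriminator_loss([0.9], [0.1])
```

When the discriminator is undecided, the generator loss equals ln 2 (about 0.693), which is a handy reference value when reading loss curves.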

Batch normalization and careful tuning of convolutional layers also help maintain stability during training and ensure that the latent space is properly explored.
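For readers unfamiliar with batch normalization, the sketch below shows its core arithmetic on a tiny batch: each feature is shifted and scaled so that it has roughly zero mean and unit variance across the batch. It deliberately omits the learnable scale and shift parameters and the running statistics that framework layers also maintain, so treat it as an illustration rather than a drop-in layer.

```python
import math
from typing import List


def batch_normalize(batch: List[List[float]], eps: float = 1e-5) -> List[List[float]]:
    """Normalize every feature column to zero mean and unit variance."""
    num_samples = len(batch)
    columns = list(zip(*batch))  # one tuple per feature
    means = [sum(column) / num_samples for column in columns]
    variances = [
        sum((value - mean) ** 2 for value in column) / num_samples
        for column, mean in zip(columns, means)
    ]
    return [
        [
            (value - mean) / math.sqrt(variance + eps)
            for value, mean, variance in zip(row, means, variances)
        ]
        for row in batch
    ]


# Two features on very different scales end up on the same scale.
batch = [[1.0, 10.0],
         [2.0, 20.0],
         [3.0, 30.0]]
normalized = batch_normalize(batch)
```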

Evaluating and fine-tuning is where the magic happens. Here’s how to make sure your model’s performance stays on track:

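In a healthy run, the generator and discriminator losses stay roughly in balance rather than both falling steadily, because each network improves at the other’s expense. If one drops toward zero too quickly, it could indicate issues: a discriminator loss near zero usually means the generator is no longer getting useful feedback, and it can accompany mode collapse (when the generator produces limited variations). Small, gradual adjustments tend to bring better results over time than large ones.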
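Part of this monitoring can be automated. The sketch below is a simple heuristic that flags the case where the discriminator loss has fallen close to zero while the generator loss has risen. The window size and threshold are hypothetical defaults that would need tuning per project, and the check complements rather than replaces looking at the curves.

```python
from typing import List


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def diagnose_losses(
    d_losses: List[float],
    g_losses: List[float],
    window: int = 5,        # hypothetical: number of recent steps to average
    d_floor: float = 0.1,   # hypothetical: "too low" discriminator loss
) -> str:
    """Return a short diagnosis based on recent loss history."""
    if len(d_losses) < window or len(g_losses) < window:
        return "not enough history"
    recent_d = mean(d_losses[-window:])
    recent_g = mean(g_losses[-window:])
    earlier_g = mean(g_losses[:window])
    # A near-zero discriminator loss plus a rising generator loss suggests
    # the discriminator is winning too easily.
    if recent_d < d_floor and recent_g > earlier_g:
        return "discriminator is overpowering the generator"
    return "losses look balanced"


runaway = diagnose_losses(
    d_losses=[0.70, 0.65, 0.60, 0.40, 0.20, 0.08, 0.05, 0.04, 0.03, 0.02],
    g_losses=[0.70, 0.75, 0.80, 1.00, 1.50, 2.00, 2.50, 3.00, 3.50, 4.00],
)
steady = diagnose_losses(
    d_losses=[0.69, 0.70, 0.68, 0.71, 0.69, 0.70],
    g_losses=[0.70, 0.71, 0.69, 0.70, 0.72, 0.70],
)
```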

Look at the generated images during training and compare them with real images to see if they’re improving. This visual check can reveal whether the model is losing diversity or detail.
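Visual inspection can be backed up with a crude numeric signal. One possible proxy for diversity, sketched below, is the average distance between pairs of generated samples compared with the same figure for real samples. This is an assumption-laden shortcut rather than an established metric, and the two-number "samples" are toy data.

```python
import itertools
import math
from typing import Sequence


def mean_pairwise_distance(samples: Sequence[Sequence[float]]) -> float:
    """Average Euclidean distance over all pairs of samples."""
    pairs = list(itertools.combinations(samples, 2))
    if not pairs:
        raise ValueError("need at least two samples")
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


# Toy data: a varied real batch and a generated batch that has collapsed
# onto a single output.
real_batch = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
collapsed_batch = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]

real_diversity = mean_pairwise_distance(real_batch)
generated_diversity = mean_pairwise_distance(collapsed_batch)

# A ratio far below 1 hints that generated samples vary less than real ones.
diversity_ratio = generated_diversity / real_diversity
```

For a conditional model it can be useful to compute this per class, since a generator may collapse for one condition while staying varied for others.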

Adjust settings like learning rate, batch size, and latent space dimensions. These tweaks can have a big impact on quality, and small changes often yield large improvements in how realistic the generated images look.
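Keeping these settings in one place makes experiments easier to compare. The sketch below groups them in a small configuration object and enumerates a grid of combinations to try. The specific values are placeholders chosen for illustration, not recommendations.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Sequence


@dataclass(frozen=True)
class TrainingConfig:
    learning_rate: float
    batch_size: int
    latent_dim: int


def config_grid(
    learning_rates: Sequence[float],
    batch_sizes: Sequence[int],
    latent_dims: Sequence[int],
) -> List[TrainingConfig]:
    """Build one config for every combination of the given settings."""
    return [
        TrainingConfig(learning_rate, batch_size, latent_dim)
        for learning_rate, batch_size, latent_dim in product(
            learning_rates, batch_sizes, latent_dims
        )
    ]


# Placeholder values for illustration only.
grid = config_grid(
    learning_rates=[2e-4, 1e-4],
    batch_sizes=[32, 64],
    latent_dims=[64, 100],
)
```

Changing one setting at a time between runs makes it much easier to attribute a change in image quality to a specific tweak.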

For projects that need frequent updates, add new data periodically. Feeding in fresh samples can reduce bias and improve the model’s ability to generalize.

Conditional GANs are valuable in various fields, from computer vision to medical data analysis and image synthesis.

Here are some examples:

Understanding these common obstacles can help you anticipate issues and refine your approach:

If you’re training cGAN models in industries dealing with sensitive information, Duality ensures your data remains secure and compliant. Here’s how:

#1 Data Quality and Privacy

Duality enables organizations to use real-world data for training models without compromising privacy. Our platform utilizes cryptographic methods like homomorphic encryption, ensuring that both the data and the model remain protected during the entire training process. This means you can train your models using diverse datasets, even sensitive ones, without the risk of exposure.

#2 Optimizing Computational Resources

With Duality, you don’t need to invest in expensive hardware or cloud services. Whether you are working on-premises or in the cloud, we provide a scalable solution that adapts to your computational needs.

#3 Privacy-Enhanced Collaboration

For organizations handling sensitive data, privacy concerns can be a significant hurdle. Duality’s privacy-preserving technologies allow multiple parties to collaborate on training models without revealing underlying data. This is ideal for industries like healthcare, finance, or law enforcement, where compliance with regulations is necessary.

Conditional generative models are a game-changer for industries with sensitive information. Think healthcare and finance. With Duality, you don’t need synthetic stand-ins. Train your models on real, sensitive data without ever risking exposure. Your data stays safe, your models get smarter, and innovation stays within the lines of privacy compliance.

Imagine training a model to analyze simulated subtraction angiography data without ever exposing real patient records. At Duality, we make this possible by allowing you to train, tune, and deploy your generative network on encrypted data.

Duality doesn’t train models but provides a robust way to secure the data you’re using.

With our platform, you can run complex queries, develop deep learning models, and extract insights while keeping everything secure. Whether you’re working with point clouds, original images, or statistical models, our tools make it easy to maintain data privacy without sacrificing quality.

Schedule a demo today and discover how Duality can transform your approach to secure and effective AI training.