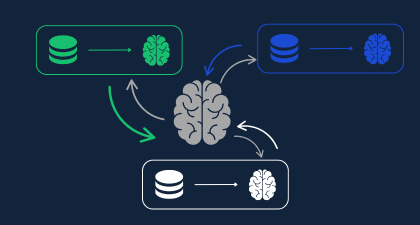

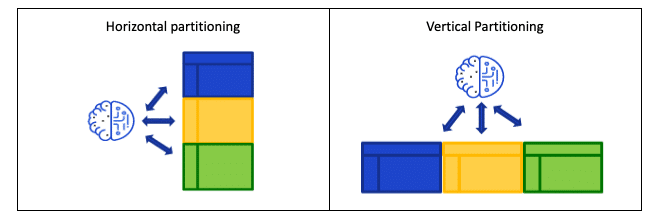

Federated Learning (FL) is a distributed machine learning (ML) technique that trains a model across multiple decentralized servers or devices, each holding its own local data, without exchanging or moving that data. The raw data remains in its original location and is never sent to other parties.

Another characteristic of FL is that it typically involves heterogeneous datasets: the size of the dataset varies from one data owner to another.

In this blog post, we will give examples of how FL is used today and describe its advantages for secure data collaboration projects. From there we will discuss the limitations of standard FL, and we will finish by introducing Duality’s Secured Federated Learning (SFL).

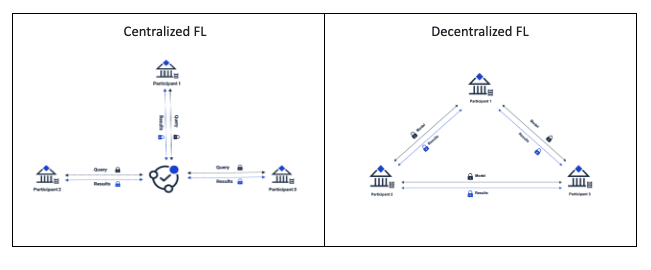

A classic example of FL is autocomplete prediction in keyboards. By analyzing a user’s keystrokes, an ML model can be trained to predict the next word or phrase the user is likely to type. In the keyboard example, the model is trained locally on each user’s mobile device, the local models are aggregated on a centralized compute node, and the aggregated model is then distributed back to the mobile devices.
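The aggregation step in the keyboard example can be sketched in a few lines of Python. This is a minimal illustration, assuming the server combines the devices' weight vectors with a weighted average based on how many samples each device holds (the common "federated averaging" approach). The function name `federated_average` and the example numbers are hypothetical and are not taken from any specific product.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Combine per-device weight vectors into one global vector.

    Each device's contribution is weighted by its number of local samples,
    so devices with more data have more influence on the global model.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client weight vector")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        for i, w in enumerate(weights):
            global_weights[i] += w * size / total
    return global_weights


# Three hypothetical devices with very different amounts of local data.
local_models = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
local_sizes = [100, 300, 600]

# The server only ever sees the weight vectors, never the keystrokes.
global_model = federated_average(local_models, local_sizes)
print(global_model)
```

Weighting by sample count is one reasonable way to handle data owners of very different sizes; other aggregation rules are possible.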

Another interesting example of FL can be found in healthcare. FL can be used to securely aggregate the results of model training on data from multiple sources, improving the ability to identify high-risk patients, discover biomarkers that could indicate the presence of a certain disease, and much more. These collaboration scenarios require data from multiple hospitals and/or clinics, and that data can be extremely difficult to share without violating privacy laws.

FL can be used in various use cases, and consequently there are specific implementations and adjustments that are made to best accommodate each scenario. At a high level, there are three topics for consideration:

One of the main disadvantages of standard FL is that the model runs in the clear. The data owner can see the model being run on their data, which puts the model at risk. For example, if a company has developed a proprietary model for the insurance industry, running that model on an insurance company’s real data can expose the model and make it susceptible to copying.

Another problem with FL is the potential for data leakage during model training. In FL, each node (edge device) performs its computation locally and then sends its partial results to the centralized server. The server aggregates the partial results and distributes the updated model to the nodes. This process is called an iteration and can be repeated as many times as needed to train the model. The security of standard FL rests on aggregation alone, so it offers only statistical protection for the private data. Academic studies show that, from the iterations and partial results, one can infer information about the underlying data itself.
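To make the leakage risk concrete, here is a deliberately simple sketch. It assumes a logistic regression model with a bias term and a node whose partial result is the gradient computed on a single record; both are assumptions chosen for illustration, and the function names are hypothetical. In that toy case the gradient with respect to the weights is just the record scaled by the prediction error, and the gradient with respect to the bias is the error itself, so dividing one by the other returns the private record.

```python
import math
from typing import List, Sequence, Tuple


def local_gradient(weights: Sequence[float], bias: float,
                   x: Sequence[float], y: float) -> Tuple[List[float], float]:
    """Logistic regression gradient for ONE record (the node's partial result)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    prediction = 1.0 / (1.0 + math.exp(-z))
    error = prediction - y
    # d(loss)/d(weights) = error * x, d(loss)/d(bias) = error
    return [error * xi for xi in x], error


def reconstruct_record(grad_weights: Sequence[float],
                       grad_bias: float) -> List[float]:
    """What anyone who sees the partial result can compute."""
    return [g / grad_bias for g in grad_weights]


# Current global model, known to the server and to every node.
weights, bias = [0.2, -0.1, 0.05], 0.0

# A private record that never leaves the node (illustrative values).
private_record = [0.5, 1.0, -1.5]
label = 1.0

grad_w, grad_b = local_gradient(weights, bias, private_record, label)
recovered = reconstruct_record(grad_w, grad_b)
print(recovered)
```

Real attacks on updates computed over many records are more involved than this, but the sketch shows why unencrypted partial results cannot be treated as harmless.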

To overcome the limitations of FL, Duality introduces Secure Federated Learning (SFL). SFL adds a layer of encryption to standard FL, encrypting the partial results sent between the data owners and the centralized server. This encryption prevents data leakage and keeps the trained model concealed from the data owners.

Unlike the typical FL approach, where security rests on statistical considerations and is therefore easier to break, Duality’s added cryptographic protocol provides a well-defined security level (typically equivalent to 128- or 256-bit AES).

Duality’s cryptographic SFL approach combines various cryptography techniques and federated learning (FL). Specifically, our solution leverages fully homomorphic encryption (FHE) and secure multiparty computation (sMPC) to create a novel cryptographic protocol that overcomes the performance limitations of FHE.
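The details of Duality's protocol are not covered in this post, so the sketch below is not that protocol and does not use FHE. It is a toy additive-masking scheme in the spirit of secure multiparty computation, included only to show the general idea: the server can learn the sum of the partial results without seeing any individual one. The constants `MODULUS` and `SCALE` and all function names are assumptions made for this example. A single function simulates every party here; in a real protocol each pair of parties would derive its shared mask from a key exchange.

```python
import secrets
from typing import List, Sequence

MODULUS = 2**61 - 1   # all arithmetic is done modulo this prime (assumed value)
SCALE = 10**6         # fixed-point scaling factor for float updates (assumed value)


def encode(value: float) -> int:
    """Map a float to a fixed-point integer modulo MODULUS."""
    return round(value * SCALE) % MODULUS


def decode(value: int) -> float:
    """Map a residue back to a signed float."""
    if value > MODULUS // 2:
        value -= MODULUS
    return value / SCALE


def mask_updates(updates: Sequence[Sequence[float]]) -> List[List[int]]:
    """Add pairwise random masks that cancel out when all updates are summed."""
    n, dim = len(updates), len(updates[0])
    masked = [[encode(v) for v in update] for update in updates]
    for i in range(n):
        for j in range(i + 1, n):
            for k in range(dim):
                mask = secrets.randbelow(MODULUS)
                masked[i][k] = (masked[i][k] + mask) % MODULUS  # party i adds
                masked[j][k] = (masked[j][k] - mask) % MODULUS  # party j subtracts
    return masked


def aggregate(masked: Sequence[Sequence[int]]) -> List[float]:
    """Server side: sum the masked vectors; the masks cancel in the total."""
    dim = len(masked[0])
    return [decode(sum(u[k] for u in masked) % MODULUS) for k in range(dim)]


# Three hypothetical data owners, each with a two-dimensional partial result.
partial_results = [[0.5, -1.25], [1.5, 0.25], [-0.75, 2.0]]
masked_results = mask_updates(partial_results)
total = aggregate(masked_results)
print(total)
```

Each masked vector looks random on its own, yet the total matches the sum of the original partial results. Production systems also have to handle dropped parties, key management, and protection of the aggregated model itself, none of which this sketch attempts.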

Our approach at Duality is that no single privacy-enhancing technology (PET) fits every case. Instead, we draw on a wide range of PETs and choose the right one for each use case. That is why we have added FL to our technology stack alongside fully homomorphic encryption and secure multiparty computation.

Duality supports a wide range of algorithms and frameworks for training and inference in a secured environment. In our latest release, we introduced a new option to train logistic regression models with cryptographic SFL. This uses an FL approach with an additional layer of cryptographic security, which makes it more secure than a typical federated learning framework.

This design provides rigorous and practical privacy-preserving machine learning for real-world data collaboration. Our strategy is based on theoretical and applied research in cryptography and algorithms. Moreover, it is delivered in a user-friendly platform that lets users apply this technique without any cryptographic knowledge.

Federated Learning is a powerful technique that has revolutionized the way machine learning models are trained. However, it comes with its own limitations: the model runs in the clear, and partial results can leak information about the data. Duality Technologies’ Secured Federated Learning (SFL) addresses these limitations and lets organizations train models on sensitive data with cryptographic protection.
