Synthetic data is information that is artificially manufactured rather than collected from real-world events. It is generated by AI, on demand, and used for a number of purposes, including training autonomous vehicles to drive more safely, addressing bias in datasets to support ethical AI, shortening development cycles, and speeding up time to proofs of concept (PoCs).

In this post you’ll learn what synthetic data is, its benefits and drawbacks, and a few real-world examples of how it can be applied.

What Synthetic Data is Not

First, it’s important to clear up some confusion about the term “synthetic data.” The definition has evolved over time. Many people still incorrectly believe it refers to rules-based, randomly generated fake data. Today, synthetic data is nothing of the sort.

Synthetic Data Defined

Synthetic data is a privacy-preserving technology that creates new data from scratch, based on the same structure, correlations and time dependencies as the original real data it’s meant to mimic. However, the synthetically generated data has no one-to-one connection to the original records, and any synthetic individual is impossible to trace back to an original real-life person. This is because rows of synthetic data are generated one by one and are not based on any one specific person or entity. Unlike differential privacy or other anonymization techniques, where one adds noise to or modifies the original data, synthetic data is essentially new data generated by a machine learning algorithm. To the naked eye, synthetic data and real data often appear indistinguishable, but the major difference is that real data is collected over time, and synthetic data is produced artificially.
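To make the idea of "learn the structure, then generate new rows" concrete, here is a minimal sketch. It assumes a deliberately simple stand-in for the machine learning model: a two-column Gaussian fitted to hypothetical (age, income) records. Production tools use far richer generative models, and the dataset, column names and function names below are illustrative only.

```python
import math
import random


def fit_gaussian(rows):
    """Estimate means, standard deviations and correlation of two numeric columns."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in rows) / (n - 1)
    var_y = sum((y - mean_y) ** 2 for _, y in rows) / (n - 1)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows) / (n - 1)
    sd_x, sd_y = math.sqrt(var_x), math.sqrt(var_y)
    return mean_x, mean_y, sd_x, sd_y, cov / (sd_x * sd_y)


def sample_synthetic(model, count, rng):
    """Draw new rows one by one from the fitted model, never copying a real row."""
    mean_x, mean_y, sd_x, sd_y, rho = model
    rows = []
    for _ in range(count):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mean_x + sd_x * z1
        # Mixing z1 into y reproduces the learned correlation between columns.
        y = mean_y + sd_y * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)
        rows.append((x, y))
    return rows


rng = random.Random(0)

# Hypothetical "real" data: (age, income), where income loosely depends on age.
real = []
for _ in range(2000):
    age = rng.uniform(20, 65)
    real.append((age, 1000 * age + rng.gauss(0, 8000)))

model = fit_gaussian(real)
synthetic = sample_synthetic(model, 2000, rng)

real_rho = model[4]
synthetic_rho = fit_gaussian(synthetic)[4]
print(f"correlation in real data:      {real_rho:.2f}")
print(f"correlation in synthetic data: {synthetic_rho:.2f}")
```

The synthetic rows are sampled from the fitted parameters only, so no row corresponds to a particular real record, yet the age-income relationship carries over.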

Why all the Hype?

So, what’s the big deal about synthetic data? Gartner predicts that by 2024, 60% of data used to train AI will be synthetically generated. And there’s a good reason too. It can be used for data protection purposes, or to enhance and augment the data you’ve already collected. As Gartner puts it, “synthetic data is the future of AI.”

Anonymous Data vs. Synthetic Data

Traditional anonymization and de-identification techniques are severely limiting. On one hand, they reduce data utility, making it extremely challenging to run accurate analytics on the obfuscated version. On the other hand, they aren’t as private as once believed. There are dozens of examples of individuals being re-identified even after data has been “anonymized,” such as the Netflix scandal. Another is the study showing that even if all personally identifiable information has been deleted from a dataset, 87% of Americans can still be re-identified with just three factors: gender, zip code and date of birth! One of the best illustrated stories, however, is this New York Times piece about tracking “anonymized” cell phone records. It’s a frightening article and will likely change the way you use your cell phone moving forward. But I digress. My point here is this: even if data is considered anonymous and meets GDPR or CCPA standards, it likely isn’t so anonymous after all.
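The re-identification risk described above can be checked on any de-identified table by counting how many rows have a unique combination of quasi-identifiers. The sketch below uses a small, made-up set of records; the field names and the `unique_fraction` helper are assumptions for illustration, not part of any specific tool.

```python
from collections import Counter

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
records = [
    {"gender": "F", "zip": "02139", "dob": "1985-03-14", "diagnosis": "asthma"},
    {"gender": "M", "zip": "02139", "dob": "1985-03-14", "diagnosis": "diabetes"},
    {"gender": "F", "zip": "02139", "dob": "1990-07-02", "diagnosis": "flu"},
    {"gender": "F", "zip": "02139", "dob": "1990-07-02", "diagnosis": "migraine"},
    {"gender": "M", "zip": "10001", "dob": "1972-11-30", "diagnosis": "asthma"},
]


def unique_fraction(rows, keys):
    """Fraction of rows whose combination of `keys` appears exactly once."""
    combos = Counter(tuple(row[k] for k in keys) for row in rows)
    unique = sum(1 for row in rows if combos[tuple(row[k] for k in keys)] == 1)
    return unique / len(rows)


by_gender = unique_fraction(records, ["gender"])
by_all_three = unique_fraction(records, ["gender", "zip", "dob"])
print(f"unique on gender alone:       {by_gender:.0%}")
print(f"unique on gender + zip + dob: {by_all_three:.0%}")
```

Any row that is unique on these three fields can be linked to a named person by anyone holding a second dataset, such as a voter roll, that contains the same fields.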

Both a Blessing and a Curse

Synthetic data aims to solve this challenge by eliminating re-identification risk. In theory, synthetic data removes the trade-off between data utility and data privacy. In some cases, organizations can achieve close to the same machine learning accuracy as if they were training on real data, without any of the re-identification risk. This leads to what I refer to as the mixed blessing of synthetic data.

The blessing is this: synthetically generated data is nearly as good as real data and, if done correctly, is never re-identifiable, making it safe to share and use. The curse, however, is the other side of this double-edged sword: the data is never re-identifiable. This can lead to serious challenges in solving real-world problems.

For example, suppose a medical researcher combing through a synthetic dataset finds that individuals who have an underlying condition and develop cancer are more likely to react poorly to a drug. The researcher may want to contact the medical center or the patients to let them know. However, with synthetically generated data, it would be impossible to determine who these similar people are in real life. For a situation like this, homomorphic encryption is probably your best bet, as it would provide the same level of privacy but also allow pre-approved parties to decrypt insights or re-identify the at-risk individuals.

Challenges of Sharing Synthetic Data in the Clear

- Lack of Control – Organizations lose the ability to track where their synthetic data ends up and how it’s used, severely limiting their ability to learn how others are leveraging their data.

- Exposes Competitive Insights – Because it’s so accurate, third parties can use synthetic data, just like aggregate data, to perform analysis and derive business insights beyond the scope of the original collaboration, thereby exposing corporate strategy. This concern often prevents competitors, like banks, from sharing their synthetic data with one another, even when they want to collaborate to solve bigger problems, like financial crime.

Use Cases

Synthetically generated data can be used to solve numerous use cases involving both structured and unstructured data.

Self-driving cars – Synthetic data can be used to augment datasets. In scenarios where researchers don’t have enough data, synthetic data, in virtually any amount, can be created relatively quickly. All this extra data can be used to speed up training and reduce costs, ultimately creating safer computer systems. “For safety-critical applications like autonomous driving, synthetic data fills in the gaps of real-world data by modeling roadway environments, complete with people, traffic lights, empty parking spaces, and more.”

Ethical AI – According to Gartner, by the end of this year (2022), 85% of AI projects will deliver erroneous outcomes due to bias in data and algorithms. As an example, Apple and Goldman Sachs recently caught some heat for giving a husband a higher credit limit than his wife even though she had a higher credit score.

This occurred because algorithms are created from historical data, and past data often reflects discriminatory practices. One way to solve this is to recognize the bias in the data and then supplement it with additional records so that under-represented groups are fairly represented. As in the self-driving example above, synthetically generated data can fill the gaps to create balance in the data, resulting in AI that is ethical and doesn’t amplify historical discrimination.
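As a rough illustration of filling gaps to balance a dataset, the sketch below tops up an under-represented group with synthetic rows. It assumes a simple SMOTE-style interpolation between pairs of existing records rather than a trained generative model, and the groups, columns and values are hypothetical. Equal group sizes alone do not guarantee a fair model; this only shows the augmentation step.

```python
import random


def interpolate_rows(rows, count, rng):
    """Create `count` new rows, each a random point between two existing rows."""
    new_rows = []
    for _ in range(count):
        a, b = rng.sample(rows, 2)
        t = rng.random()
        new_rows.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    return new_rows


rng = random.Random(42)

# Hypothetical applicants as (income, credit_score); group B is under-represented.
group_a = [(rng.gauss(60000, 10000), rng.gauss(700, 40)) for _ in range(900)]
group_b = [(rng.gauss(58000, 10000), rng.gauss(710, 40)) for _ in range(100)]

shortfall = len(group_a) - len(group_b)
synthetic_b = interpolate_rows(group_b, shortfall, rng)
group_b_balanced = group_b + synthetic_b

print(f"group A rows: {len(group_a)}")
print(f"group B rows: {len(group_b)} real + {len(synthetic_b)} synthetic")
```

Because each new row lies between two real rows of the same group, the synthetic records stay within the range of values actually observed for that group.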

Rapid PoCs – Large organizations can speed up the time it takes to initiate and complete proofs of concept. JPM Chase says it used to take them nine months to kick off a PoC because of all the delays involved with anonymizing data and getting it approved for sharing with vendors.

Imagine a company wants to test a new AI startup vendor aimed at improving employee retention. To test this vendor, it would have to share its real data with the vendor, get results, and then analyze the results for accuracy. One way to improve this process while also protecting its data would be to use synthetically generated data. This way the enterprise could share data that mimics its real data without actually making its privacy-sensitive HR data available to anyone external. And this process works. JPM Chase accelerated its process from nine months to three weeks, and you can too.

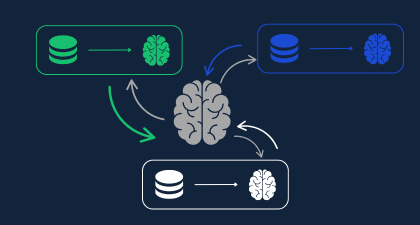

Combine Synthetic Data with other Privacy-Preserving Techniques

Historically, organizations and government agencies have been unable to share data externally due to privacy, legal, and classification restrictions. One approach to overcome this challenge involves combining data synthesis and quantum-safe homomorphic encryption.

The solution involves using AI to generate synthetic data, and then adding another layer of protection by leveraging fully homomorphic encryption so that researchers can analyze the underlying data without ever decrypting it. This policy-enforced approach limits external parties to performing only pre-approved analytics on the data while eliminating the need for data owners to ever share data, synthetic or raw, in the clear. This process ensures organizations remain in complete control of their data and improves their security posture. This approach also solves the dilemma facing competing banks that want to work together in a privacy-preserving way to stop financial crime.
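To give a feel for computing on data that stays encrypted, here is a toy sketch. Note the swap: it uses the Paillier scheme, which is only additively homomorphic, is not fully homomorphic, and is not quantum-safe. The primes are far too small for real use, the random generator is not cryptographically secure, and the two-bank scenario and function names are hypothetical.

```python
import math
import random


def keygen(p, q):
    """Build a toy Paillier key pair from two small primes (insecure sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to g = n + 1
    return n, (lam, mu)


def encrypt(n, message, rng):
    """Encrypt an integer 0 <= message < n under public key n."""
    n_sq = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the hidden plaintexts."""
    return (c1 * c2) % (n * n)


def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n * mu) % n


rng = random.Random(7)  # demo only; real systems need a secure random source
public_n, private_key = keygen(1009, 1013)

# Two hypothetical banks each encrypt a count of flagged transactions.
bank_a = encrypt(public_n, 20, rng)
bank_b = encrypt(public_n, 22, rng)

# A third party combines the counts without seeing either value.
encrypted_total = add_encrypted(public_n, bank_a, bank_b)

# Only the key holder can read the pre-approved aggregate.
total = decrypt(public_n, private_key, encrypted_total)
print(total)
```

The party doing the addition only ever handles ciphertexts, which mirrors the idea of allowing a pre-approved computation while the inputs are never shared in the clear.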

If you have a challenge that you believe can be solved with synthetic data or privacy-enhancing technology but you’re not sure which method or technique would be best, click the lunch and learn button below to discuss it over your favorite food delivery order.