Software is shifting toward more autonomous systems, known as agentic AI. These systems can make independent decisions, act on behalf of users, and even learn from their experiences. Gartner predicts that by 2028, 33% of enterprise software applications will include agentic AI, a sharp increase from less than 1% in 2024.

While agentic AI holds great potential, it also introduces new challenges to data governance, challenges that differ from those posed by traditional AI systems. To ensure data security and compliance with privacy regulations, organizations must rethink their data governance strategies.

What is Agentic AI?

Agentic AI refers to AI that not only responds to user commands but can also take autonomous actions, make decisions, and adapt over time without explicit instructions from users.

For instance, an agentic AI might independently schedule meetings, purchase items, or analyze data to make business decisions, all without needing real-time human input.

Unlike traditional AI systems, which typically perform narrowly defined tasks in response to explicit inputs, agentic AI operates with a higher level of complexity. These systems learn from their environment, evolve based on user interaction, and make decisions that are often not predetermined. This increased autonomy creates significant risks for data governance because these systems might process, combine, or act on data in ways that were not initially anticipated by their designers or users.

What is Data Governance?

Data governance is the set of policies, processes, and roles that determine how an organization manages its data. Its goal is to establish accountability, consistency, and transparency in how data is handled throughout its lifecycle, from collection to storage and usage. As organizations generate and store vast amounts of data, strong governance frameworks are essential for mitigating risks and maintaining trust.

The Data Governance Challenges of Agentic AI

1. Complex Data Access and Control

Data governance traditionally operates on the premise that organizations can define who has access to which data and when. Agentic AI complicates that premise. These systems require access to a range of data sources to make decisions, and the data they access may change dynamically as the AI learns. This fluid access pattern makes it harder to monitor and control who is using the data and for what purposes.

For example, an agentic AI system might access sensitive data from a variety of sources, including customer databases, financial records, or personal user information, without clear visibility into how this data is being used. Traditional access control mechanisms, like role-based access control (RBAC), may not be sufficient to ensure that sensitive data is only used by authorized parties in authorized ways.

2. Heightened Privacy Concerns

The autonomous decision-making abilities of agentic AI systems raise significant privacy concerns. Since these systems operate without continuous human oversight, they may process personal and sensitive data in ways that users are not fully aware of or have not consented to. Agentic AI can infer insights from data, combine it across different sources, and even share it in ways that violate privacy regulations like GDPR or HIPAA.

Take, for example, an AI that learns about a user’s personal preferences by analyzing communication patterns, purchasing history, and online activity. In some cases, the AI might infer sensitive information that the user hasn’t explicitly provided, such as their health conditions or financial status. This increased risk of unintentional data exposure means that data governance practices must be updated to ensure user consent is properly obtained and maintained over time.

The stakes are high: GDPR violations can result in fines of up to €20 million or 4% of total worldwide annual turnover (whichever is higher), while CCPA (California Consumer Privacy Act) penalties can reach $7,500 per intentional violation.
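Because the GDPR ceiling is the greater of two amounts, the exposure is easy to work out. The following is a minimal sketch of that arithmetic; the function name and the sample company figures are illustrative assumptions, not data from any real case.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: float) -> float:
    """Upper bound on a GDPR fine: 4% of turnover or EUR 20 million, whichever is higher."""
    return max(0.04 * annual_global_turnover_eur, 20_000_000.0)


# Hypothetical companies, for illustration only.
small_company_cap = gdpr_max_fine_eur(100_000_000)    # 4% is EUR 4M, so the EUR 20M figure applies
large_company_cap = gdpr_max_fine_eur(2_000_000_000)  # 4% is EUR 80M, which exceeds EUR 20M
```

For smaller organizations the fixed €20 million figure dominates, while for large enterprises the percentage does.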

3. Accountability in Autonomous Actions

When an AI system acts autonomously, it’s often unclear who is responsible if something goes wrong. For instance, if an agentic AI makes an incorrect decision that results in financial loss or harm to a user, it may not be immediately obvious whether the blame lies with the AI developer, the AI system itself, or the user.

This lack of clarity creates a governance gap, as traditional models for accountability rely on human decision-makers who can be held responsible for actions taken with data. As AI continues to become more autonomous, it’s essential to establish frameworks that clearly define who is accountable for AI actions and how those actions can be audited or traced.

4. Compliance and Regulatory Risk

As organizations deploy agentic AI systems that handle data across multiple platforms, they face new compliance challenges. Data protection laws such as GDPR and CCPA impose strict requirements on how personal data is collected, stored, and processed. However, these laws were primarily designed with human-operated systems in mind, not autonomous AI.

The challenge arises when agentic AI systems operate across multiple data sources and act on data in unpredictable ways. In these cases, it becomes difficult to ensure that the system remains compliant with all applicable regulations.

For instance, an agentic AI might inadvertently combine data in ways that violate privacy laws or might make decisions that breach regulatory constraints. Traditional governance models may not be equipped to address these dynamic and cross-jurisdictional issues, requiring new compliance frameworks that can adapt to the nature of agentic AI.

Adapting Data Governance for Agentic AI

Dynamic Access Control Policies

Traditional access control mechanisms must evolve to account for the autonomous nature of agentic AI, which means adopting more dynamic and adaptive access control policies. This could include real-time monitoring of data usage and AI activities, so that access remains appropriate and any unauthorized access or activity is flagged immediately. It’s also important to design systems that can automatically adjust access based on the context in which data is being used.
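One way to picture a context-dependent policy is a check that looks at the purpose and sensitivity of each request rather than only the caller's role. The sketch below is an assumption-laden illustration: the `AccessRequest` fields, the `ALLOWED_PURPOSES` table, and the resource names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    agent_id: str
    resource: str
    purpose: str       # why the agent says it needs the data
    sensitivity: str   # "low" or "high"


# Hypothetical policy: the purposes each resource may be used for.
ALLOWED_PURPOSES = {
    "customer_db": {"support_ticket", "billing"},
    "financial_records": {"billing"},
}

# Denied requests are collected here so a human can review them.
flagged = []


def authorize(req: AccessRequest, human_approved: bool = False) -> bool:
    """Allow a request only if its purpose is permitted for the resource.

    High-sensitivity data additionally requires explicit human approval.
    """
    purpose_ok = req.purpose in ALLOWED_PURPOSES.get(req.resource, set())
    approval_ok = req.sensitivity != "high" or human_approved
    allowed = purpose_ok and approval_ok
    if not allowed:
        flagged.append(req)  # surfaced for review rather than silently dropped
    return allowed
```

The same agent can be allowed to read `customer_db` for a support ticket and denied for marketing, which a purely role-based check would not distinguish.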

Technical solutions include:

- Zero-Knowledge Proofs: Allow AI to verify information without accessing raw data

- Differential Privacy: Add calibrated statistical noise to query results to protect individual privacy while maintaining analytical utility

- Federated Learning: Train AI models on distributed data without centralizing sensitive information

- Homomorphic Encryption: Enable computations on encrypted data without decryption
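To make one of these techniques concrete, the following is a minimal sketch of differential privacy using the Laplace mechanism on a counting query. It is a teaching example under stated assumptions, not a production implementation: the function names, the `epsilon` value, and the sample data are hypothetical, and a real deployment would also track the privacy budget across queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential samples is Laplace-distributed
    # with the given scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)  # seeded only to make the sketch repeatable
ages = [23, 35, 41, 52, 67, 29, 71]  # hypothetical records
noisy_seniors = private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng)
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives a more accurate answer.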

Enhanced Transparency and Explainability

Users, administrators, and regulators need to be able to understand how an AI system is making its decisions and how data is being used. This can be achieved by implementing AI explainability tools that provide clear, accessible insights into the decision-making process of agentic AI. These tools can help ensure that the AI’s actions align with organizational policies and ethical standards, and they can assist in identifying and correcting any biases or errors that might emerge over time.

Context-Aware Privacy Measures

Organizations must adopt context-aware privacy measures that adapt to the changing nature of data usage. This could include implementing policies that require explicit user consent before data is processed by an AI system, particularly for sensitive data. Additionally, privacy measures should be built into the AI’s operational framework to prevent unauthorized sharing or inference of personal data, even if the AI system doesn’t directly access that data.
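A consent requirement of this kind can be enforced at the point where an agent touches the data. The sketch below assumes a hypothetical in-memory `ConsentRegistry` keyed by user and purpose; a real system would persist grants and record when and how consent was given.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    # Maps (user_id, purpose) -> True when the user has opted in.
    _grants: dict = field(default_factory=dict)

    def grant(self, user_id: str, purpose: str) -> None:
        self._grants[(user_id, purpose)] = True

    def revoke(self, user_id: str, purpose: str) -> None:
        self._grants.pop((user_id, purpose), None)

    def allows(self, user_id: str, purpose: str) -> bool:
        return self._grants.get((user_id, purpose), False)


def process_for_agent(registry: ConsentRegistry, user_id: str, purpose: str, record: dict) -> dict:
    """Hand a record to the agent only if consent exists for this exact purpose."""
    if not registry.allows(user_id, purpose):
        raise PermissionError(f"no consent from {user_id} for purpose '{purpose}'")
    # Return only what the agent needs; here, just the field names.
    return {"user_id": user_id, "purpose": purpose, "fields": sorted(record)}
```

Because consent is checked per purpose, revoking it takes effect on the next request without any change to the agent itself.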

Emerging approaches include:

- Constitutional AI: Building ethical principles directly into AI training

- Token-Based Access Control: More granular than traditional RBAC, enabling precise permission management

- AI Sandboxing: Testing agentic AI in controlled environments before production deployment

- Automated “Kill Switches”: Emergency protocols for stopping runaway AI agents
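As an illustration of the last item, a kill switch can be as simple as a guard that the agent loop must pass before every action. This is a minimal single-process sketch; the `KillSwitch` class, the action budget, and `run_agent` are hypothetical names, and a real agent platform would need to enforce the stop outside the agent's own process.

```python
import threading


class KillSwitch:
    """Stops an agent when an operator trips it or an action budget runs out."""

    def __init__(self, max_actions: int):
        self._stop = threading.Event()  # lets an operator trip it from another thread
        self._max_actions = max_actions
        self._count = 0  # not lock-protected: assumes a single agent thread calls check()

    def trip(self) -> None:
        self._stop.set()

    @property
    def tripped(self) -> bool:
        return self._stop.is_set()

    def check(self) -> None:
        """Call before every agent action; raises once the agent must stop."""
        if self._stop.is_set():
            raise RuntimeError("kill switch tripped")
        self._count += 1
        if self._count > self._max_actions:
            self._stop.set()
            raise RuntimeError("action budget exceeded")


def run_agent(actions, switch: KillSwitch) -> list:
    """Execute actions in order until the switch says stop."""
    completed = []
    for action in actions:
        try:
            switch.check()
        except RuntimeError:
            break
        completed.append(action)  # stand-in for actually performing the action
    return completed
```

The budget gives an automatic stop for runaway loops, while `trip()` gives operators a manual one.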

Collaboration Across Disciplines

Addressing the governance challenges of agentic AI requires collaboration among a diverse group of stakeholders. This includes AI developers, data scientists, legal experts, and privacy officers, all of whom must work together to develop governance frameworks that are flexible and scalable. Through interdisciplinary collaboration, organizations can build data governance frameworks that balance the need for innovation with the requirement to protect privacy, security, and compliance.

The Human Element: Building a Culture of Responsible AI

Technology alone won’t solve the governance challenges of agentic AI. To build a culture of responsible AI, organizations must create roles like AI Ethics Officers, AI Auditors, and Data Stewards for AI, supported by ongoing training in AI governance and privacy. That training can include specialized certifications and continuous learning for staff.

Cultural shifts are also crucial, such as embedding “privacy by design” into AI development, forming cross-functional governance committees, and encouraging active questioning of AI decisions. These steps ensure human judgment remains integral to AI governance as systems evolve.

The Future of AI Governance

As we move toward 2030, several trends will shape how organizations govern agentic AI:

AI Governing AI

Future systems may use AI to monitor and govern other AI systems, creating multi-layered governance architectures that can respond to threats in real time.

Blockchain Integration

Decentralized ledgers could provide immutable audit trails for AI decisions, improving accountability and transparency.
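The property that makes such a trail tamper-evident is hash chaining: each entry commits to the one before it. The sketch below shows only that core idea in a single process. It is not a decentralized ledger (there is no replication or consensus), and the entry layout and function names are illustrative assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def _digest(prev_hash: str, payload: dict) -> str:
    # Canonical JSON so the same payload always hashes the same way.
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list, payload: dict) -> None:
    """Append an AI decision record that commits to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "payload": payload, "hash": _digest(prev_hash, payload)})


def verify(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Changing any past record invalidates its hash and every later link, so an auditor who holds the latest hash can detect the edit.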

Regulatory Evolution

Expect more sophisticated regulations that specifically address autonomous AI systems, potentially including requirements for AI “licenses” or mandatory governance frameworks.

Privacy-Preserving Technologies Become Standard

Technologies like homomorphic encryption and secure multi-party computation will move from experimental to essential, enabling AI to work with sensitive data while maintaining privacy.
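To give a feel for secure multi-party computation, the following sketch uses additive secret sharing so that several parties can learn the sum of their private values without revealing any individual value. It is a simplified illustration that assumes honest participants and runs everything in one process; the function names and the choice of modulus are assumptions for the example.

```python
import secrets

PRIME = 2**61 - 1  # a Mersenne prime used as the modulus for all arithmetic


def share(secret: int, n_parties: int) -> list:
    """Split a value into n random-looking shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list) -> int:
    return sum(shares) % PRIME


def secure_sum(private_inputs: list) -> int:
    """Compute the total of all inputs from shares alone."""
    n = len(private_inputs)
    # Each party splits its input into n shares, one destined for each party.
    distributed = [share(x, n) for x in private_inputs]
    # Party j adds the j-th share it received from everyone and publishes only that.
    partial_sums = [sum(row[j] for row in distributed) % PRIME for j in range(n)]
    # Combining the published partial sums reveals the total and nothing else.
    return reconstruct(partial_sums)
```

Any single share, or any partial sum, is uniformly random on its own; only the combination of all of them yields the result.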

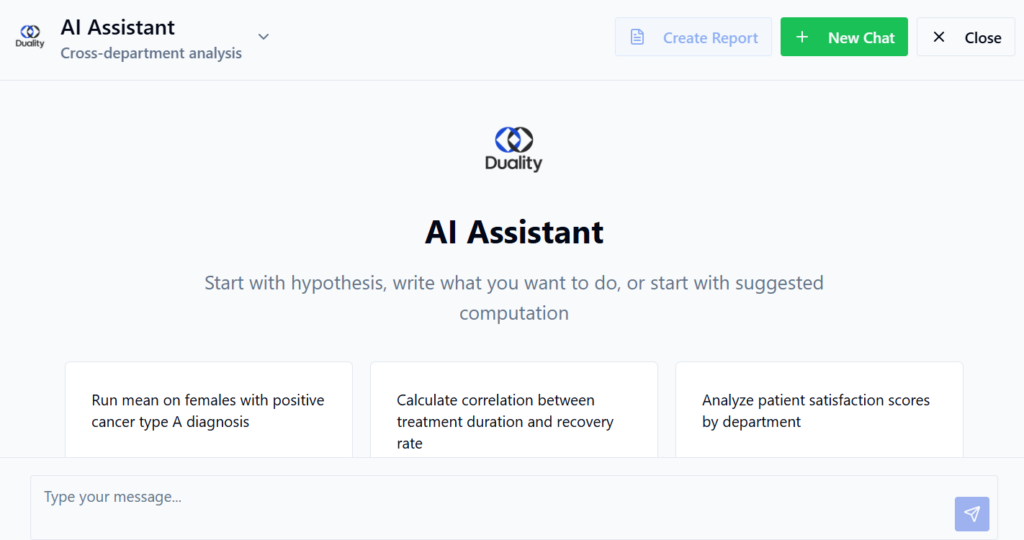

Secure Your AI Future with Duality Technologies

Duality Technologies offers the industry’s most secure privacy-enhanced data collaboration platform, specifically designed to address the governance challenges of AI systems.

Our platform leverages privacy-enhancing technologies (PETs), including:

- Fully Homomorphic Encryption (FHE): Enable AI to process encrypted data without ever exposing it

- Secure Multi-party Computation: Collaborate on sensitive data across organizations while maintaining privacy

- Federated Learning: Train AI models on distributed data without centralizing information

- Differential Privacy: Protect individual privacy while maintaining analytical utility

With Duality, you can:

✓ Enable agentic AI to work with sensitive data while maintaining complete privacy

✓ Ensure regulatory compliance by design, not as an afterthought

✓ Create auditable trails for all AI data interactions

✓ Implement dynamic, context-aware access controls

✓ Accelerate AI deployment with built-in governance guardrails

Join leading organizations in financial services, healthcare, and government who trust Duality to secure their AI initiatives. The future of AI is autonomous. Make sure your governance is ready.