Large Language Models (LLMs) are powerful when applied to public data, but their true transformative value comes from unlocking the knowledge hidden inside organizations’ own sensitive datasets. Government agencies, healthcare institutions, legal firms, and enterprises generate massive volumes of information every day, yet much of it remains siloed, underutilized, or inaccessible due to security and compliance risks.

Secure LLM inference with Retrieval-Augmented Generation (RAG) changes this equation. By combining advanced retrieval with LLM reasoning inside a Trusted Execution Environment (TEE), organizations can safely query, analyze, and extract insights from their private records, from procurement contracts to patient histories, without ever exposing them to third parties. This marks a fundamental shift from “searching the web” to securely unlocking the value of your own vaults of data.

What is RAG & Why It’s Relevant Today

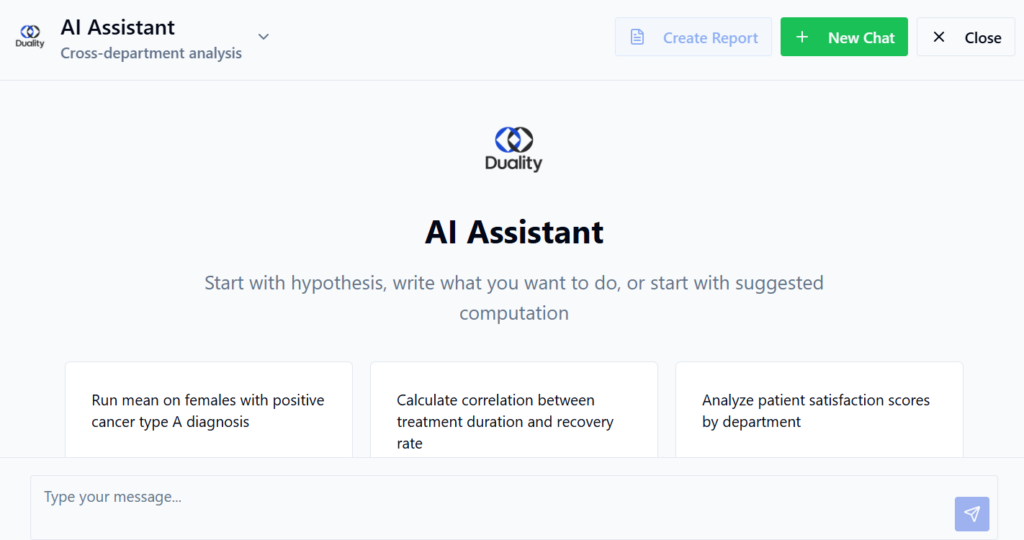

The Problem: Organizations generate enormous amounts of data in different formats every day, making it increasingly difficult to analyze that data or locate the most relevant information when needed. Have you ever searched your archives to pull together every relevant piece of information on a specific case? Or needed to check an agreement with a partner but couldn’t locate the exact document or extract a clear answer from it?

How AI Helps: Retrieval-Augmented Generation (RAG) addresses these problems by combining a Large Language Model’s reasoning capabilities with a retriever that searches a data source, which can be your own data. Instead of relying solely on what the model learned during training, RAG dynamically fetches relevant documents and injects them into the prompt at query time. This enables organizations to query their internal, sensitive documents, from procurement contracts to case files, from corporate email archives to regulatory filings.
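To make "injects them into the prompt at query time" concrete, here is a minimal sketch of the prompt-assembly step. The function name `build_rag_prompt`, the `max_chars` budget, the instruction wording, and the sample passages are all illustrative assumptions, not part of any specific product; the retrieval step that produces `passages` is assumed to have already run.

```python
from typing import List


def build_rag_prompt(question: str, passages: List[str], max_chars: int = 2000) -> str:
    """Place retrieved passages ahead of the user's question (hypothetical helper)."""
    context_lines = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage.strip()}"
        if used + len(entry) > max_chars:
            break  # stay within the assumed context budget
        context_lines.append(entry)
        used += len(entry)
    context = "\n".join(context_lines)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
    )


# Made-up passages standing in for documents returned by a retriever.
passages = [
    "Contract 17: late deliveries incur a penalty of 2% per week.",
    "Contract 42: payment is due within 30 days of invoice.",
]
prompt = build_rag_prompt("Which contracts include late delivery penalties?", passages)
print(prompt)
```

Numbering the passages lets the model cite which document supports each part of its answer, which is useful when analysts need to trace a summary back to a source record.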

Security Drawbacks of Standard RAG Flows

Most current RAG implementations assume the retriever can access the internet or public APIs. Alternatively, they require moving your data to the LLM provider. Once access is granted, it becomes very difficult to control what is done with your data, how it is used, or even who uses it. This creates serious security and compliance risks, especially for industries with regulated or sensitive data, such as healthcare, government, finance, and legal, where documents may not leave your controlled environment.

The Value of Secure RAG

A secure RAG solution addresses this challenge by protecting your data throughout the entire process, ensuring that both retrieval and inference happen inside a trusted, safeguarded environment.

Keeping the entire flow, from retrieval to inference, inside a trusted enclave eliminates uncontrolled access, preserves compliance, and can turn weeks of oversight or contract analysis into seconds. This shift not only reduces risk but also enables new models of collaboration across ministries, agencies, or enterprises, unlocking insights that were previously hidden by data silos and manual effort.

What is a Trusted Execution Environment? A Trusted Execution Environment (TEE) is a hardware-based security feature that creates a protected area in a server’s memory, inaccessible to the operating system, administrators, or external actors. TEEs are offered by all major cloud providers, and they ensure that sensitive data and computations cannot be observed or tampered with while being processed. For Secure RAG, the TEE provides the foundation that allows retrieval, inference, and policy checks to run securely without exposing data to third parties.

Public Sector Use Case: The AI-Ready Procurement Office

Background: Government procurement involves managing thousands of contracts and supplier agreements across years and in fragmented systems and departments. Oversight requires identifying compliance gaps, ensuring fair terms, maintaining accountability, and making past data accessible for ad-hoc analysis.

Because government supplier data is sensitive and cannot be shared with external tools, analysts are left manually scanning PDFs, running old-fashioned searches through emails and shared drives, and comparing clauses side by side, a process that is time-consuming, error-prone, and resource-intensive.

The Value: Secure RAG empowers procurement offices to instantly analyze supplier compliance across years of records, find matching reports and suppliers, and produce clear summaries, all while sensitive information remains protected.

Practical examples of queries include:

- “List all suppliers with late delivery penalties in the past 2 years.”

- “Compare pricing terms across all IT procurement contracts signed last year.”

- “Summarize compliance gaps in health sector supplier contracts.”

- “Retrieve all contracts from Supplier X across all departments in the last 5 years.”

- “Identify suppliers with repeated contract extensions across multiple departments beyond original terms.”

Secure RAG dramatically accelerates oversight while improving transparency and accountability, all without risking data exposure. By keeping everything inside a trusted enclave, the agency gains faster insights, stronger compliance, and greater public trust.

Private Sector Use Cases: Additional Examples

Beyond the public sector, secure RAG brings value to multiple industries where sensitive, high-volume data must be leveraged safely.

Legal: Legal firms generate enormous amounts of case-related content. A secure RAG system lets them put this content to work, for example by summarizing precedents similar to an ongoing case, while keeping their data secure.

Healthcare: Summarize patient outcomes across institutions, platforms, systems and trials without exposing identifiable health records.

How It’s Done: Secure LLM Inference with RAG Using Trusted Execution Environment

Secure LLM inference with RAG is managed in two parts, ingest and query:

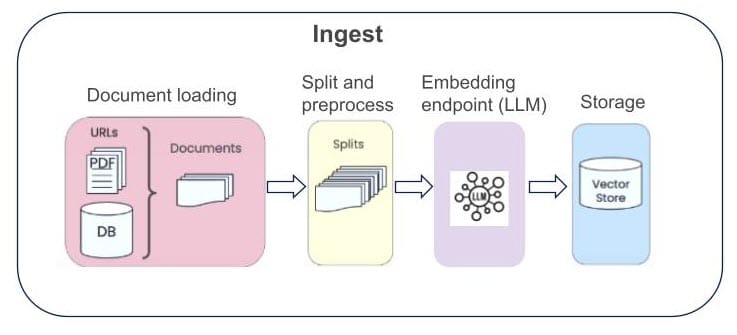

Ingest: The process begins with securely ingesting and indexing private data sources such as contracts, logs, and records inside a secure enclave. Once indexing completes, the data is stored encrypted in a vector store that is external to the TEE, and the relevant pieces are retrieved as needed during computation.

This ensures all sensitive data remains protected from the start and is available in an organized structure for later retrieval.

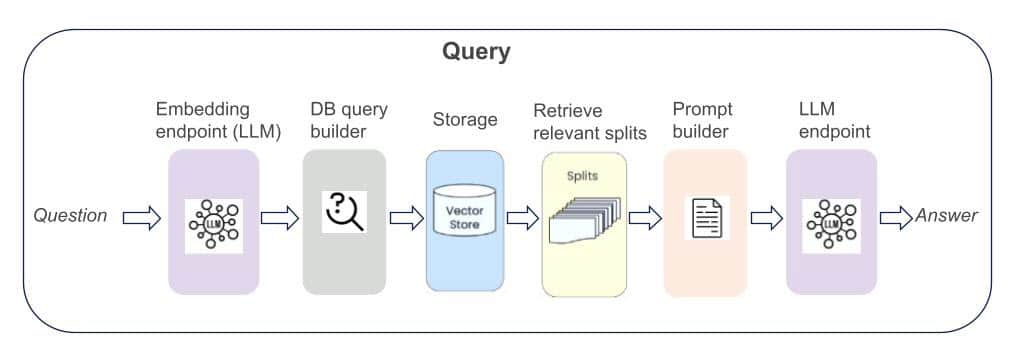

Query: Once the data is indexed, users can query the system through natural language or API calls. The query workflow is composed of three main steps:

- Retriever: The retriever locates and fetches the most relevant internal documents from within the secure enclave. This is typically done through vector embeddings that represent the semantic meaning of each document. When a query is made, the query is converted into an embedding, and similarity scores are computed (for example, using cosine similarity) to determine which documents are most relevant to the user’s request.

- LLM: The large language model then processes these retrieved documents entirely inside the TEE, generating contextually rich insights without exposing raw data. Running the model inside the TEE requires GPU-enabled trusted execution, ensuring both high-performance inference and secure handling of sensitive data.

- Policy Check & Output: Before results leave the enclave, a policy check ensures outputs meet compliance requirements and that only approved information is returned.
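The retrieval and policy-check steps above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the actual implementation: `embed` is a bag-of-words stand-in for a real embedding model, the documents are made up, and `BLOCKED_PATTERNS` is a hypothetical policy rule that redacts ID-number-like strings. The LLM call between the two steps is omitted.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a vector of term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, index: Dict[str, Counter], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k document ids whose vectors score highest against the query."""
    q = embed(query)
    scored = [(doc_id, cosine_similarity(q, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical output policy: redact strings shaped like ID numbers.
BLOCKED_PATTERNS = [re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]


def policy_check(answer: str) -> str:
    """Apply redaction rules before the answer leaves the enclave."""
    for pattern in BLOCKED_PATTERNS:
        answer = pattern.sub("[REDACTED]", answer)
    return answer


# Made-up documents for illustration only.
documents = {
    "contract-a": "Supplier Alpha pays a late delivery penalty of two percent per week",
    "contract-b": "Supplier Beta invoice payment is due within thirty days",
    "contract-c": "Office lease renewal terms for the regional branch",
}
index = {doc_id: embed(text) for doc_id, text in documents.items()}

top = retrieve("late delivery penalty", index, k=2)
print(top)
print(policy_check("Contact person ID 123-45-6789 approved the extension."))
```

A production retriever would use dense embeddings from a model and an approximate nearest-neighbor index rather than a linear scan, but the ranking principle (score every candidate against the query vector, keep the top k) is the same.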

Security & Compliance

PET stack: At the core of secure RAG lies a stack of privacy-enhancing technologies (PETs), including Trusted Execution Environments (TEEs), encrypted vector databases for secure storage and retrieval, workload signing for integrity, and a full audit trail for accountability. These mechanisms ensure data remains protected not just during storage, but throughout processing and inference.
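One common way to make an audit trail tamper-evident is to chain entries by hash, so that editing any past record invalidates every later one. The sketch below illustrates that general technique only; it is an assumption about how such a trail could be built, not a description of this architecture's actual audit mechanism. All names (`append_event`, `verify_log`, the event fields) are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: Dict[str, str]) -> str:
    """Hash the previous entry's hash together with this event's canonical JSON."""
    payload = json.dumps(event, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append_event(log: List[dict], event: Dict[str, str]) -> None:
    """Append an event, linking it to the hash of the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)})


def verify_log(log: List[dict]) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: List[dict] = []
append_event(audit_log, {"user": "analyst-1", "action": "query", "detail": "late delivery penalties"})
append_event(audit_log, {"user": "analyst-2", "action": "query", "detail": "pricing terms"})
print(verify_log(audit_log))  # chain is intact

# Simulate someone rewriting history.
audit_log[0]["event"]["detail"] = "edited after the fact"
print(verify_log(audit_log))  # chain no longer verifies
```

A hash chain alone only detects tampering by someone who cannot also rewrite the whole log; in practice the latest hash would additionally be signed or anchored somewhere the log's operator cannot modify.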

By design, this architecture is built to support compliance with strict regulations such as GDPR for data protection in Europe, HIPAA for safeguarding health records, and ITAR for sensitive government and defense-related information. In practice, this means organizations can apply AI to their most sensitive datasets with greater confidence that they are meeting their compliance requirements.

Secure RAG redefines how organizations can apply AI: not on the open internet, but on the sensitive, high-value data they already own. By combining retrieval, inference, and strict policy controls inside trusted execution environments, it delivers trustworthy insights without compromising security or compliance. Whether applied to government procurement, healthcare research, or legal case analysis, Secure RAG accelerates discovery, enhances accountability, and ensures full control of data use. It transforms organizational knowledge into a strategic advantage, safely and responsibly.